Test Learner Solutions

When you create assessment items in MATLAB® Grader™, you define tests that check whether the learner solution meets the success criteria.

Define Tests

To define tests, you can use the predefined tests available in the Test Type list or write your own MATLAB code. The predefined test options include:

Variable Equals Reference Solution: checks the existence, data type, size, and value of a variable. This option is available in the Test Type list only for script assessments. A default tolerance of 1e-4 is applied to numeric values.

Function or Keyword Is Present: checks for the presence of specific functions or keywords.

Function or Keyword Is Absent: checks that the learner solution does not include the specified functions or keywords.

For example, if you want learners to use the taylor function to compute the Taylor series approximation of a given function, specify these options:

In the Test Type list, select Function or Keyword Is Present.

Specify taylor as the function that the learner must use.

Optionally, specify additional feedback that the learner receives if they do not use the expected function.

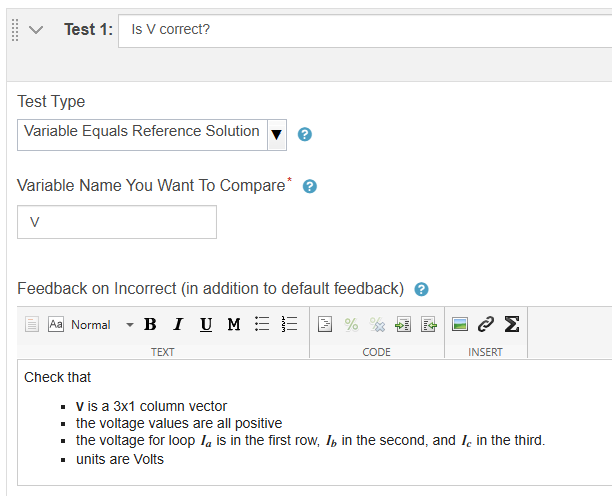
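The same check can also be written as a test of type MATLAB Code by calling assessFunctionPresence. The following is a minimal sketch, not the only way to set it up; the feedback text is a hypothetical example, and the code runs only inside a MATLAB Grader test.

```matlab
% Runs only inside a MATLAB Grader test of type MATLAB Code.
% The feedback message below is a hypothetical example.
assessFunctionPresence('taylor', ...
    Feedback='Use the taylor function to compute the series approximation.');
```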

For a script assessment, you can assess a variable by using the predefined test type Variable Equals Reference Solution. For example, check whether a variable has the expected size, values, and data type by comparing it with the variable of the same name in your reference solution.

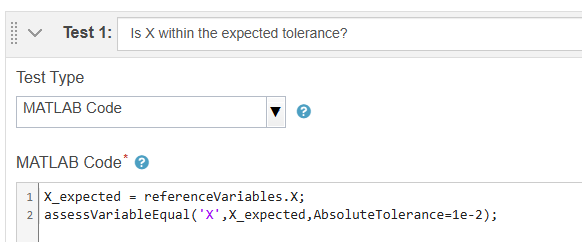

You can also write MATLAB code by selecting the MATLAB Code option in the Test Type list. Follow these guidelines:

Assessing correctness: you can use the functions that correspond to the predefined test types: assessVariableEqual, assessFunctionPresence, and assessFunctionAbsence. Alternatively, you can write a custom test that returns an error for incorrect results.

Variable names in script assessments: to refer to variables in your reference solution, add the prefix referenceVariables, as in referenceVariables.myvar. To refer to learner variables, use the variable name.

Function names in function assessments: to call the reference solution, add the prefix reference, as in reference.myfunction. To call the learner function, use the function name.

Variable scope: variables that you create in the test code exist only within that assessment test.
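As an illustration of the custom-test guideline, the sketch below uses the standard MATLAB assert function, which raises an error when its condition is false. The variable myvar, its expected shape, and the messages are assumptions made for this example. In MATLAB Grader, myvar would come from the learner's script; it is defined here only so that the sketch runs on its own.

```matlab
% Hypothetical learner variable. In MATLAB Grader this comes from the
% learner's script; it is defined here only so the sketch runs standalone.
myvar = [1 2 3];

% Variables created in the test code, such as expectedLength,
% exist only within this test.
expectedLength = 3;

% assert raises an error when the condition is false, which is how a
% custom test signals an incorrect result.
assert(isnumeric(myvar) && isrow(myvar), ...
    'myvar must be a numeric row vector.');
assert(numel(myvar) == expectedLength, ...
    'myvar must contain %d elements.', expectedLength);
```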

For example, suppose that the learner must calculate a value for the variable X that can vary by more than the default tolerance. To compare the value in the learner solution with the value in your reference solution, specify the variables and the tolerance in a call to the assessVariableEqual function.
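A call of this kind might look like the following sketch. The tolerance value 0.01 is an illustrative assumption, not a recommended setting, and the code runs only inside a MATLAB Grader test for a script assessment.

```matlab
% Runs only inside a MATLAB Grader test of type MATLAB Code
% for a script assessment.
% The absolute tolerance of 0.01 is an assumed, illustrative value.
assessVariableEqual('X', referenceVariables.X, AbsoluteTolerance=0.01);
```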

To test function assessments, call the learner function and the reference function with a test input value and compare the outputs. For example, this code checks that a learner function named tempF2C correctly converts a temperature from degrees Fahrenheit to degrees Celsius.

temp = 78;

tempC = tempF2C(temp);

expectedTemp = reference.tempF2C(temp);

assessVariableEqual('tempC',expectedTemp);

Tip

The default error message of the assessVariableEqual function includes the name of the tested variable, such as X and tempC in the previous examples. For function assessments, this variable is in the assessment test script, not in the learner code. Use meaningful names that the learner can recognize, such as an output in the function declaration.

A single assessment test can include multiple checks. For example, this code checks that the learner implements a function named normsinc that correctly handles both zero and nonzero values. The zero case gives the learner additional feedback by using the Feedback name-value argument of the assessVariableEqual function.

nonzero = 0.25*randi([1 3]);

y_nonzero = normsinc(nonzero);

expected_y_nonzero = reference.normsinc(nonzero);

assessVariableEqual('y_nonzero',expected_y_nonzero);

y_zero = normsinc(0);

expected_y_zero = reference.normsinc(0);

assessVariableEqual('y_zero',expected_y_zero, ...
    Feedback='Inputs of 0 should return 1. Consider an if-else statement or logical indexing.');

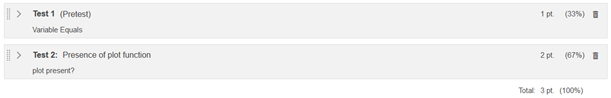

Give Partial Credit

By default, the software considers a solution correct if all tests pass and incorrect if any test fails. To give partial credit, assign relative weights to the tests by changing Scoring Method to Weighted. MATLAB Grader computes the percentage for each test from the total of the relative weights, so you can define the weights as points or as percentages.

For example, if you set the relative weight of every test to 1, each test carries the same weight. Setting some weights to 2 makes those tests count twice as much as tests with a weight of 1.

Alternatively, you can enter percentages for the weights.
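The arithmetic behind relative weights can be sketched in plain MATLAB. The weights and pass/fail outcomes below are hypothetical. The sketch assumes that the score is the sum of the percentages of the passed tests, and it is not taken from the MATLAB Grader implementation.

```matlab
% Hypothetical relative weights for three tests.
weights = [1 1 2];

% Each test's share of the total, as a percentage.
percentages = 100 * weights / sum(weights);   % [25 25 50]

% Hypothetical outcome: tests 1 and 3 pass, test 2 fails.
passed = [true false true];

% Assumed scoring rule: add up the percentages of the passed tests.
score = sum(percentages(passed));             % 75
```

Entering the weights as 25, 25, and 50 gives the same percentages, because only the ratio of each weight to the total matters.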

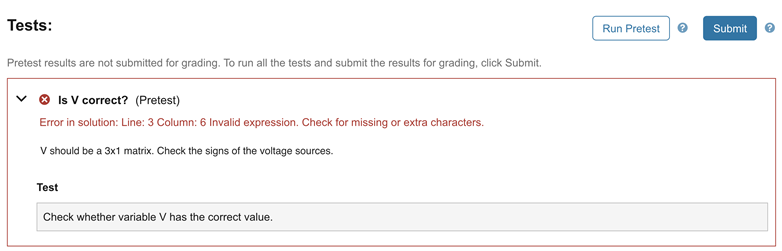

Specify Pretests

Pretests are assessment tests that learners can run to determine whether their solution is on the right track before they submit it for grading. Consider using a pretest to guide learners when several approaches are correct but the test requires a specific one, or when you set a limit on the number of submission attempts.

Pretests differ from regular assessment tests in these ways:

Pretest results are not recorded in the gradebook before submission.

Running pretests does not count toward the attempt limit.

Learners can view the code and details of pretests, including the output of tests with MATLAB code, regardless of whether the test passes or fails. Make sure that the pretest does not include the solution to the assessment.

Like regular assessment tests, pretests run when learners submit their solutions, and they contribute to the final grade.

For example, suppose that learners must define a system of linear equations that can be arranged in several ways. Define a pretest to make sure that the learner solution has the expected order and coefficients.

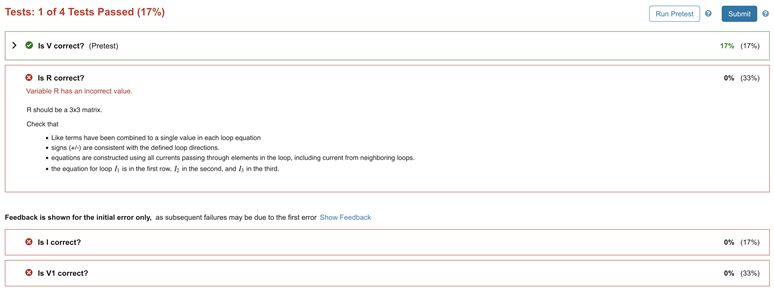

The learner sees an error for a failed test and can then modify the solution.

Control Error Display for a Script Assessment

In a script assessment, an initial error can cause subsequent errors. You can encourage learners to focus on the initial error first.

If you select the Only Show Feedback for Initial Error option, detailed feedback appears for the initial error but is hidden by default for subsequent errors. The learner can display this additional feedback by clicking Show Feedback.