Read ROS 2 Image Messages in Simulink® and Perform Registration using Feature Matching

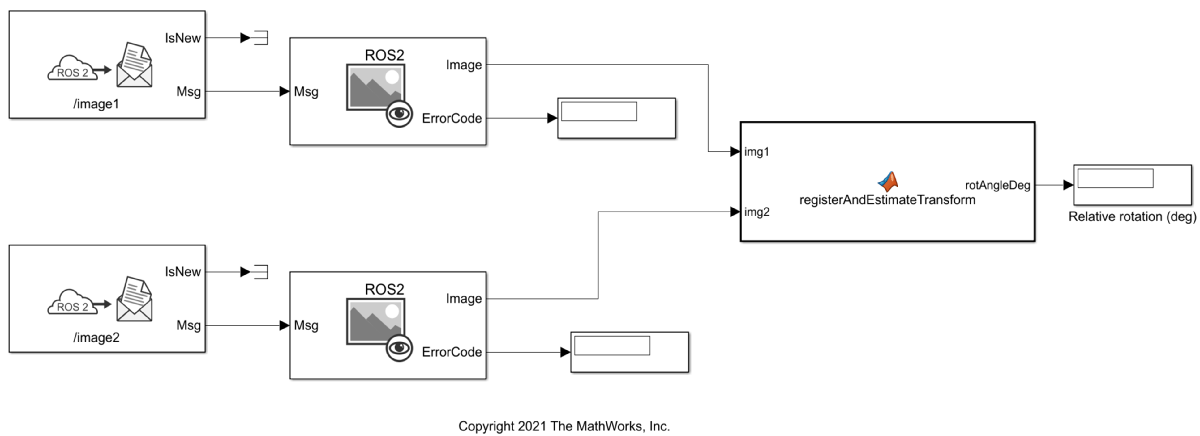

This example shows how to read ROS 2 sensor_msgs/Image messages in Simulink® and perform image registration using feature matching to estimate the relative rotation of one image with respect to the other. This example requires Computer Vision Toolbox®.

Set Up ROS 2 Network

Load two sample sensor_msgs/Image messages, imageMsg1 and imageMsg2. Create a ROS 2 node with two publishers to publish the messages on the topics /image_1 and /image2. For the publishers, set the quality of service (QoS) property Durability to transientlocal. This ensures that the publishers maintain the messages for any subscribers that join after the messages have already been sent. Publish the messages using the send function.

load('ros2ImageMsgs.mat'); node = ros2node('/image_test'); imgPub1 = ros2publisher(node,'/image1','sensor_msgs/Image',Durability='transientlocal',Depth=2); imgPub2 = ros2publisher(node,'/image2','sensor_msgs/Image',Durability='transientlocal',Depth=2); send(imgPub1,imageMsg1); send(imgPub2,imageMsg2);

Read messages from ROS 2 Network in Simulink and Perform Image Registration

Open the model.

modelName = 'readImageAndEstimateTransformationExampleModel.slx';

open_system(modelName);

Ensure that the two Subscribe blocks are connected to the topics, /image1 and /image2. The value of the Durability QoS parameter of the Subscribe blocks is Transient local. This ensures compatibility with the QoS settings of the publishers that you configured in the previous section. The messages from the subscribers are fed to the Read Image blocks to extract the image data, which is then fed to the registerAndEstimateTransform MATLAB Function block to perform registration using feature matching.

Under Simulation tab, select ROS Toolbox > Variable Size Messages. Notice that the Use default limits for this message type is clear. Then, in the Maximum length column, verify that the data property of the sensor_msgs/Image message has a value greater than the number of pixels in the image (921600). Run the model.

sim(modelName);

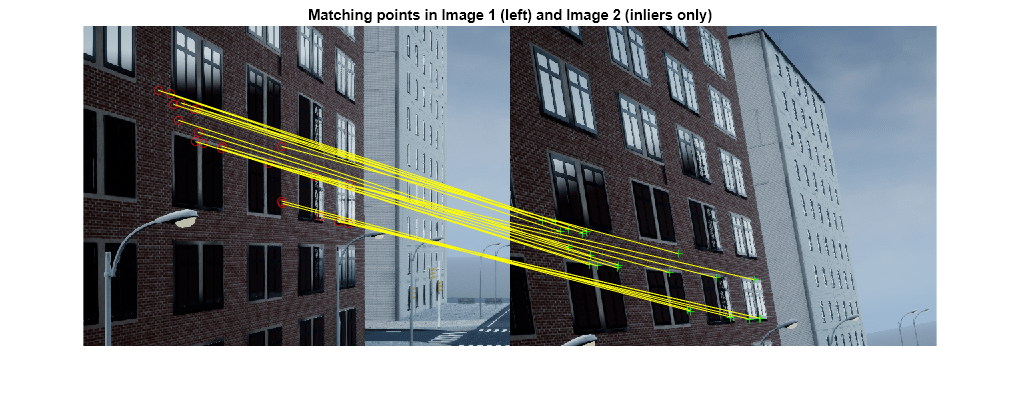

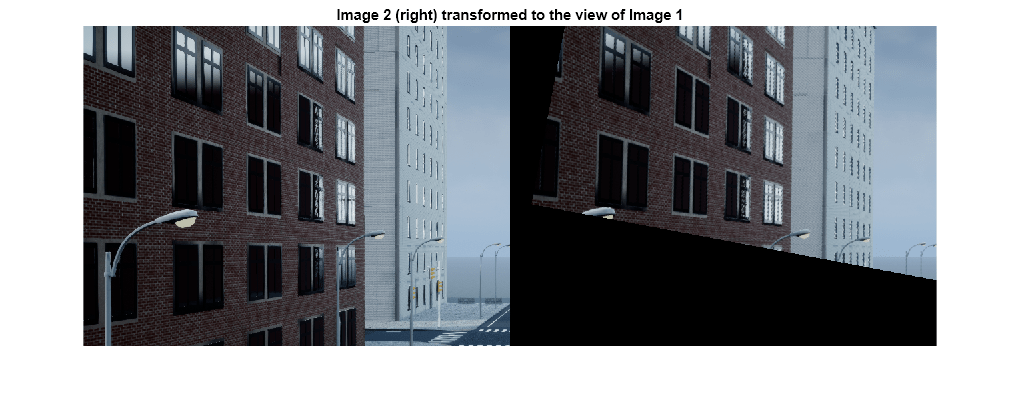

The model performs image registration, estimates the 2D transformation and displays the relative rotation (deg) of the image published on /image2 topic, with respect to that on /image1. It also visualizes the two images along with the matched inlier points and the /image2 image transformed into the view of the /image1 image.

MATLAB function for Image Registration using Feature Matching

This model uses the algorithm in the ExampleHelperRegisterAndEstimateTransform MATLAB Function to perform image registration and the relative transform estimation, consisting of these steps:

Detect and extract ORB features and corresponding valid points in the two images using

detectORBFeatures(Computer Vision Toolbox) andextractFeatures(Computer Vision Toolbox).Find candidate matches and collect the actual corresponding point locations from both the images using

matchFeatures(Computer Vision Toolbox).Estimate the relative geometric transformation between the two images along with inliers using

estimateGeometricTransform2D(Computer Vision Toolbox), which implements the M-estimator sample consensus (MSAC), a variant of the random sample consensus (RANSAC) algorithm.Compute and output the relative rotation from the estimated geometric transformation.

For more information about local feature detection and extraction such as different kinds of feature detectors, see Local Feature Detection and Extraction (Computer Vision Toolbox). Feature matching is also a key initial step in Visual SLAM applications. For an overview of the Visual SLAM workflow using MATLAB™, see Implement Visual SLAM in MATLAB (Computer Vision Toolbox).

function rotAngleDeg = ExampleHelperRegisterAndEstimateTransform(img1,img2) %% %#codegen % Declare functions not supported for code generation as extrinsic coder.extrinsic('extractFeatures'); coder.extrinsic('showMatchedFeatures') coder.extrinsic('imshowpair'); % Initialize the transform tform = affine2d(single(eye(3))); %% Feature Detection and Extraction % Convert images to grayscale Igray1 = rgb2gray(img1); Igray2 = rgb2gray(img2); % Detect ORB features in two images pointsImage1 = detectORBFeatures(Igray1,'ScaleFactor',1.2,'NumLevels',8); pointsImage2 = detectORBFeatures(Igray2,'ScaleFactor',1.2,'NumLevels',8); % Select 1500 uniformly distributed points pointsImage1 = selectUniform(pointsImage1,1500,size(Igray1,1:2)); pointsImage2 = selectUniform(pointsImage2,1500,size(Igray2,1:2)); % Extract features from the two images [featuresImage1, validPointsImage1] = extractFeatures(Igray1,pointsImage1); [featuresImage2, validPointsImage2] = extractFeatures(Igray2,pointsImage2); %% Feature Matching % Find candidate matches indexPairs = matchFeatures(featuresImage1,featuresImage2,'Unique',true,'MaxRatio',0.6,'MatchThreshold',40); sz = size(indexPairs); % Find the matched feature point locations in both images matchedPointsImage1 = validPointsImage1(indexPairs(1:sz(1),1)); matchedPointsImage2 = validPointsImage2(indexPairs(1:sz(1),2)); %% Transform estimation % Estimate the transformation matrixbetween the two images based on matched points [tform,inlierIdx] = estimateGeometricTransform2D(matchedPointsImage2,matchedPointsImage1,'similarity'); % Compute the rotation angle from the transformation matrix cosVal = tform.T(1,1); sinVal = tform.T(2,1); rotAngleDeg = atan2d(sinVal,cosVal); % Remove all the outliers inliersImage1 = matchedPointsImage1(inlierIdx); inliersImage2 = matchedPointsImage2(inlierIdx); %% Visualize matched inliers and transformed image figure % subplot(2,1,1) showMatchedFeatures(img1,img2,inliersImage1,inliersImage2,'montage'); title('Matching points in Image 1 (left) and Image 2 (inliers only)') figure % subplot(2,1,2) outputView = imref2d(size(img1)); img3 = imwarp(img2,tform,'OutputView',outputView); imshowpair(img1,img3,'montage'); title('Image 2 (right) transformed to the view of Image 1') end

See Also

Topics

- Read A ROS Image Message In Simulink

- Local Feature Detection and Extraction (Computer Vision Toolbox)