Detect and Track Face Using Raspberry Pi Pan Tilt HAT

This example shows how to use the Pan Tilt HAT block from Simulink® Support Package for Raspberry Pi® Hardware to detect and track a face in a video frame. The example also tracks a face when a person tilts their head either left or right or moves toward or away from the camera. If the face is not visible or goes out of focus, the model tries to reacquire the face and then tracks the face. The model in this example can detect and track only one face at a time. You can also enable the proportional-integral-derivative (PID) controller to achieve a smooth motion of the pan and tilt hardware module for tracking the face.

Prerequisites

Download and install OpenCV version 4.5.2 or higher

For more information on how to use Simulink Support Package for Raspberry Pi Hardware, see Get Started with Simulink Support Package for Raspberry Pi Hardware.

Required Hardware

Raspberry Pi board

Power supply with at least 1 A output

Pan Tilt HAT hardware module

Camera board

Hardware Setup

Mount the Pan Tilt HAT hardware module on your Raspberry Pi board.

Mount the camera board to your pan and tilt hardware module.

Connect the CSI cable of the camera board to the CSI port on the Raspberry Pi. For more information, refer to Camera Module.

Configure and calibrate the pan tilt hardware module. For more information on how to configure and calibrate the pan and tilt hardware module, see Configure and Calibrate Pan Tilt Hardware Using Raspberry Pi Pan Tilt HAT.

Configure Simulink Model and Calibrate Parameters

Open the raspberrypi_pantilthat_face_tracking Simulink model.

Input Capture and Conversion

Use the V4L2 Video Capture block to capture live video using the camera connected to the Raspberry Pi hardware board. The Convert RGB to Image subsystem converts the RGB image components to a single data type for further processing. Use the toggle switch to enable or disable the PID controllers for setting the pan and tilt angles for face tracking.

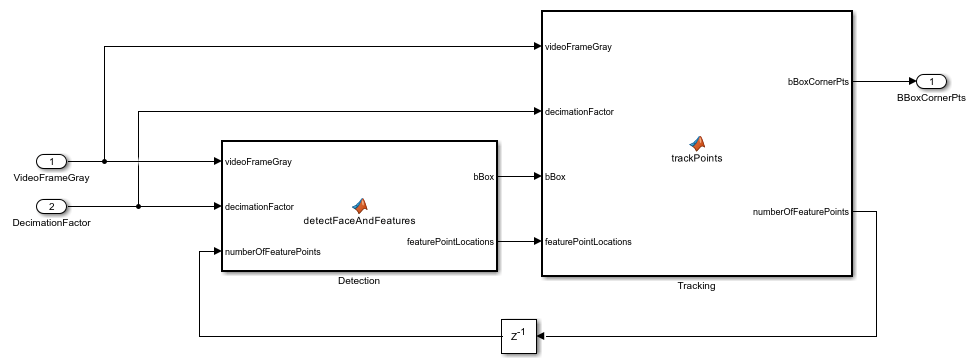

Algorithm to Detect and Track Face

The Detection and Tracking subsystem uses the video frame with the detected face to create a bounding box and feature points for the face.

The vision.CascadeObjectDetector System object™ detects the location of the face in the captured video frame. The cascade object detector uses the Viola-Jones detection algorithm and a trained classification model for detection. After the face is detected, facial feature points are identified using the Good Features to Track method proposed by Shi and Tomasi. The vision.PointTracker System object tracks the identified feature points by using the Kanade-Lucas-Tomasi (KLT) feature-tracking algorithm. For each point in the previous frame, the point tracker attempts to find the corresponding point in the current frame. The estimateGeometricTransform2D function then estimates the translation, rotation, and scale between the old points and the new points. The function then applies this transformation to the bounding box around the face.
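The last two steps above, estimating the motion between matched points and applying it to the bounding box, can be illustrated with a small language-neutral sketch. This is not the code used in the model: it is a plain least-squares fit written in Python, whereas estimateGeometricTransform2D also rejects outlier point pairs. The function names estimate_similarity and transform_box are hypothetical and exist only for this illustration.

```python
import cmath
import math


def estimate_similarity(old_points, new_points):
    """Fit new = a * old + b in the least-squares sense.

    Points are (x, y) tuples treated as complex numbers, so the complex
    factor `a` encodes scale (abs(a)) and rotation (phase of a), and the
    complex offset `b` encodes translation.
    """
    if len(old_points) != len(new_points) or len(old_points) < 2:
        raise ValueError("need at least two matched point pairs")
    p = [complex(x, y) for x, y in old_points]
    q = [complex(x, y) for x, y in new_points]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    if den == 0:
        raise ValueError("old points must not all coincide")
    num = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q))
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def transform_box(corners, a, b):
    """Apply the fitted transform to the bounding box corner points."""
    moved = [a * complex(x, y) + b for x, y in corners]
    return [(z.real, z.imag) for z in moved]


# Feature points move by: scale 2, rotation 90 degrees, translation (5, 3).
old_pts = [(0, 0), (1, 0), (1, 1), (0, 1)]
new_pts = [(5, 3), (5, 5), (3, 5), (3, 3)]
a, b = estimate_similarity(old_pts, new_pts)
scale = abs(a)
rotation_deg = math.degrees(cmath.phase(a))
box = transform_box([(0, 0), (2, 0), (2, 2), (0, 2)], a, b)
```

Because the same transform is applied to all four corners, the box rotates and scales with the face instead of staying axis-aligned.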

Although it is possible to use the cascade object detector on every frame, doing so is computationally expensive. The detector can also fail to find the face at times, such as when the subject turns or tilts their head. This limitation results from the type of trained classification model used for detection. In this example, you detect the face once, and then the KLT algorithm tracks the face across the video frames. Detection is performed again only when the face is no longer visible or when the tracker cannot find enough feature points.
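The detect-once, then-track control flow described above can be sketched as a small state machine. This is an illustrative Python outline under stated assumptions, not the logic inside the Detection and Tracking subsystem: the class name DetectThenTrack, the three callables, and the min_points threshold of 10 are all hypothetical, and the detector and tracker are replaced by stand-in functions that operate on dictionaries instead of images.

```python
class DetectThenTrack:
    """Run the expensive detector only when tracking has too few points."""

    def __init__(self, detect, extract_points, track, min_points=10):
        self.detect = detect                  # frame -> box or None
        self.extract_points = extract_points  # (frame, box) -> list of points
        self.track = track                    # (frame, points) -> surviving points
        self.min_points = min_points          # assumed threshold, not from the model
        self.points = []
        self.detector_calls = 0

    def step(self, frame):
        """Process one frame and return 'detected', 'tracked', or 'lost'."""
        if len(self.points) < self.min_points:
            # Not enough points to track: fall back to detection.
            self.detector_calls += 1
            box = self.detect(frame)
            if box is None:
                self.points = []
                return "lost"
            self.points = self.extract_points(frame, box)
            return "detected"
        # Enough points: follow them into the current frame.
        self.points = self.track(frame, self.points)
        if len(self.points) < self.min_points:
            return "lost"
        return "tracked"


# Stand-in frames: whether a face is present and how many points survive.
frames = [
    {"face": True, "visible": 20},
    {"face": True, "visible": 15},
    {"face": True, "visible": 4},
    {"face": False, "visible": 0},
    {"face": True, "visible": 12},
]

tracker = DetectThenTrack(
    detect=lambda f: (0, 0, 10, 10) if f["face"] else None,
    extract_points=lambda f, box: list(range(f["visible"])),
    track=lambda f, pts: pts[: f["visible"]],
)
results = [tracker.step(f) for f in frames]
```

In this run the detector is called on only three of the five frames, which is the saving the paragraph above describes.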

The Dynamic memory allocation functionality in MATLAB® enables you to use the System objects and functions in the MATLAB Function block.

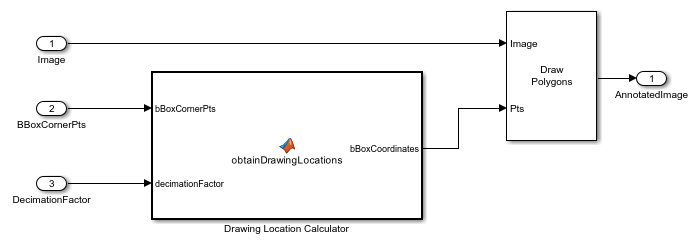

Display with Bounding Box on Face

The Draw Annotations subsystem inserts a rectangle around the corner points of the bounding box.

The Draw Shapes block draws the bounding box corner points.

Algorithm to Move Pan and Tilt HAT

Using the Pan and Tilt Hat Logic subsystem, you can either enable or disable controlling the pan and tilt servo mechanism using a PID controller. Enabling the PID controller ensures smooth transition between the servo mechanisms and improves face tracking.

The Pan and Tilt Hat Logic subsystem receives the following four inputs:

Bounding box corner points — Rectangular bounding box coordinates for the detected face

Decimation factor — Downsample the input image by the value specified in the Decimation Factor Constant block to fit the bounding box around the face

Image resolution — Resolution of the input image

With PID — Input 1 for enabling PID controller, input 0 for disabling PID controller

When the PID controller is disabled, the Pan and Tilt Angle MATLAB Function block inside the Simple Pan and Tilt Logic subsystem calculates the centroid of an input sample image from the bounding box coordinate values. Based on these values, the pan and tilt hardware module moves along the X- and Y-axes to center the face in the SDL Video Display window. The algorithm calculates the pan and tilt angles for the input frame samples determined by the skipSample parameter of the Pan and Tilt Angle MATLAB Function block. In this example, the skipSample parameter is set to 3. The decrementOvershoot parameter of the same block reduces overshoot in the pan and tilt angle calculation.
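As a rough illustration of this non-PID path, the following Python sketch computes the bounding box centroid and nudges the pan and tilt angles toward the image center. It is not the code inside the Pan and Tilt Angle block. It assumes that skipSample means "update on every Nth frame", that decrementOvershoot acts as a gain below 1, that bounding box coordinates are in the downsampled image, and that servo angles lie in the range -90 to 90 degrees. The deg_per_pixel constant and the sign conventions are likewise hypothetical.

```python
def bbox_centroid(corners):
    """Mean of the bounding box corner points."""
    cx = sum(x for x, _ in corners) / len(corners)
    cy = sum(y for _, y in corners) / len(corners)
    return cx, cy


def clamp(value, low=-90.0, high=90.0):
    """Keep an angle inside the assumed servo range."""
    return max(low, min(high, value))


def simple_pan_tilt(corners, decimation, resolution, angles, frame_index,
                    skip_sample=3, decrement_overshoot=0.5, deg_per_pixel=0.1):
    """Return updated (pan, tilt) angles in degrees for one frame."""
    pan, tilt = angles
    # Assumed meaning of skipSample: only act on every Nth frame.
    if frame_index % skip_sample != 0:
        return pan, tilt
    cx, cy = bbox_centroid(corners)
    # Assumed: box coordinates come from the decimated image, so scale
    # them back to full-resolution pixels.
    cx *= decimation
    cy *= decimation
    width, height = resolution
    err_x = cx - width / 2
    err_y = cy - height / 2
    # Assumed meaning of decrementOvershoot: shrink each correction step.
    step = decrement_overshoot * deg_per_pixel
    pan = clamp(pan - step * err_x)
    tilt = clamp(tilt + step * err_y)
    return pan, tilt


# Face 40 full-resolution pixels right of center in a 320x240 image.
right_of_center = [(90, 50), (110, 50), (110, 70), (90, 70)]
pan, tilt = simple_pan_tilt(right_of_center, 2, (320, 240), (0.0, 0.0), frame_index=3)
```

A smaller gain gives smaller correction steps, which trades tracking speed for less overshoot.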

When the PID controller is enabled, the Face and Image Centroid Difference MATLAB Function block inside the Pan and Tilt Logic with PID subsystem calculates the centroid of an input image from the bounding box coordinate values. The MATLAB Function block then calculates the error lengths (the differences between the face centroid and the image centroid) along both the X- and Y-axes. The PID Controller blocks use the error lengths as inputs. The Pan and Tilt Angle MATLAB Function block calculates the angles for moving the pan and tilt servo motors. These angles provide a smooth movement for tracking the face and centering it in the SDL Video Display window.
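The PID path can be sketched in the same spirit. The Python below is a minimal discrete PID controller driving a single pan axis and is not the implementation of the PID Controller blocks in the model. The gains, the sample time, the use of the controller output as an angle increment, the 10 pixels-per-degree camera model, and the names PID, centroid_error, and simulate_pan are all assumptions made for this illustration.

```python
class PID:
    """Textbook discrete PID controller with a fixed sample time."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = 0.0

    def update(self, error):
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def centroid_error(corners, resolution):
    """Pixel offset of the bounding box centroid from the image center."""
    cx = sum(x for x, _ in corners) / len(corners)
    cy = sum(y for _, y in corners) / len(corners)
    width, height = resolution
    return cx - width / 2, cy - height / 2


def clamp(value, low=-90.0, high=90.0):
    """Keep an angle inside the assumed servo range."""
    return max(low, min(high, value))


def simulate_pan(target_deg, steps, pixels_per_degree=10.0):
    """Drive a simulated pan axis toward a face at a fixed angle.

    The pixel error is modeled as proportional to the remaining angle,
    and the PID output is treated as an angle increment (an assumption).
    """
    pid = PID(kp=0.03, ki=0.01, kd=0.001, dt=0.1)
    pan = 0.0
    for _ in range(steps):
        error_px = pixels_per_degree * (target_deg - pan)
        pan = clamp(pan + pid.update(error_px))
    return pan, pixels_per_degree * (target_deg - pan)


first_output = PID(kp=0.03, ki=0.01, kd=0.001, dt=0.1).update(200.0)
final_pan, residual_px = simulate_pan(target_deg=20.0, steps=300)
```

With these example gains the simulated axis settles on the target angle after a small overshoot. Real gains would need tuning on the hardware.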

Before you run the Simulink model, face the camera and ensure that your face appears approximately at the center of the SDL Video Display window. This ensures that the pan and tilt hardware module tracks your face accurately.

Run Simulink Model in Connected IO Mode

On the Hardware tab of the Simulink model, in the Mode section, click Run on board and then select Connected IO from the drop-down list. In the Run on Computer section, click Run with IO.

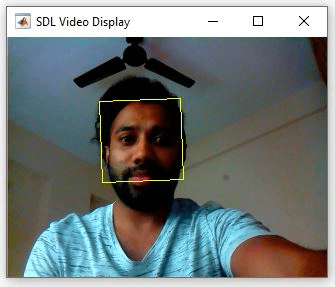

Capture your face using the camera. The Simulink model detects your face and draws a bounding box around it.

Observe that when you tilt your head or move toward or away from the camera, the Simulink model tracks your face. Note that the Pan Tilt HAT model can track only horizontal and vertical movements from the center of the image. Any other movements can result in inaccurate tracking.

Toggle the PID switch in the Input Capture and Conversion area and observe the output in the SDL Video Display block.

Deploy Simulink Model on Raspberry Pi Board

On the Hardware tab of the Simulink model, in the Mode section, click Run on board and then select Run on board from the drop-down list. In the Deploy section, click Build, Deploy & Start.

Observe that when you tilt your head or move toward or away from the camera, the Simulink model tracks your face.

Toggle the PID switch in the Input Capture and Conversion area and observe the output in the SDL Video Display block.

References

Viola, Paul A., and Michael J. Jones. "Rapid Object Detection Using a Boosted Cascade of Simple Features." IEEE Conference on Computer Vision and Pattern Recognition, 2001.

Lucas, Bruce D., and Takeo Kanade. "An Iterative Image Registration Technique with an Application to Stereo Vision." International Joint Conference on Artificial Intelligence, 1981.

Tomasi, Carlo, and Takeo Kanade. "Detection and Tracking of Point Features." Carnegie Mellon University Technical Report CMU-CS-91-132, 1991.

Shi, Jianbo, and Carlo Tomasi. "Good Features to Track." IEEE Conference on Computer Vision and Pattern Recognition, 1994.

Kalal, Zdenek, Krystian Mikolajczyk, and Jiri Matas. "Forward-Backward Error: Automatic Detection of Tracking Failures." International Conference on Pattern Recognition, 2010.