Share your ideas, suggestions, and wishlists for improving MathWorks products. What would make the software absolutely perfect for you? Discuss your idea(s) with other community users.

Guidelines & Tips

We encourage all ideas, big or small! To help everyone understand and discuss your suggestion, please include as much detail as possible in your post:

- Product or Feature: Clearly state which product (e.g., MATLAB, Simulink, or a toolbox) or specific feature your idea relates to.

- The Problem or Opportunity: Briefly describe what challenge you’re facing or what opportunity you see for improvement.

- Your Idea: Explain your suggestion in detail. What would you like to see added, changed, or improved? How would it help you and other users?

- Examples or Use Cases (optional): If possible, include an example, scenario, or workflow to illustrate your idea.

- Related Posts (optional): If you’ve seen similar ideas or discussions, feel free to link to them for context.

Ready to share your idea?

Click here and then "Start a Discussion" to let the community know how MATLAB could be even better for you!

Thank you for your contributions and for helping make MATLAB Central a vibrant place for sharing and improving ideas.

We are excited to announce the first edition of the MathWorks AI Challenge. You’re invited to submit innovative solutions to challenges in the field of artificial intelligence. Choose a project from our curated list and submit your solution for a chance to win up to $1,000 (USD). Showcase your creativity and contribute to the advancement of AI technology.

Watch episodes 5-7 for the new stuff, but the whole series is really great.

Local large language models (LLMs), such as llama, phi3, and mistral, are now available in the Large Language Models (LLMs) with MATLAB repository through Ollama™!

Read about it here:

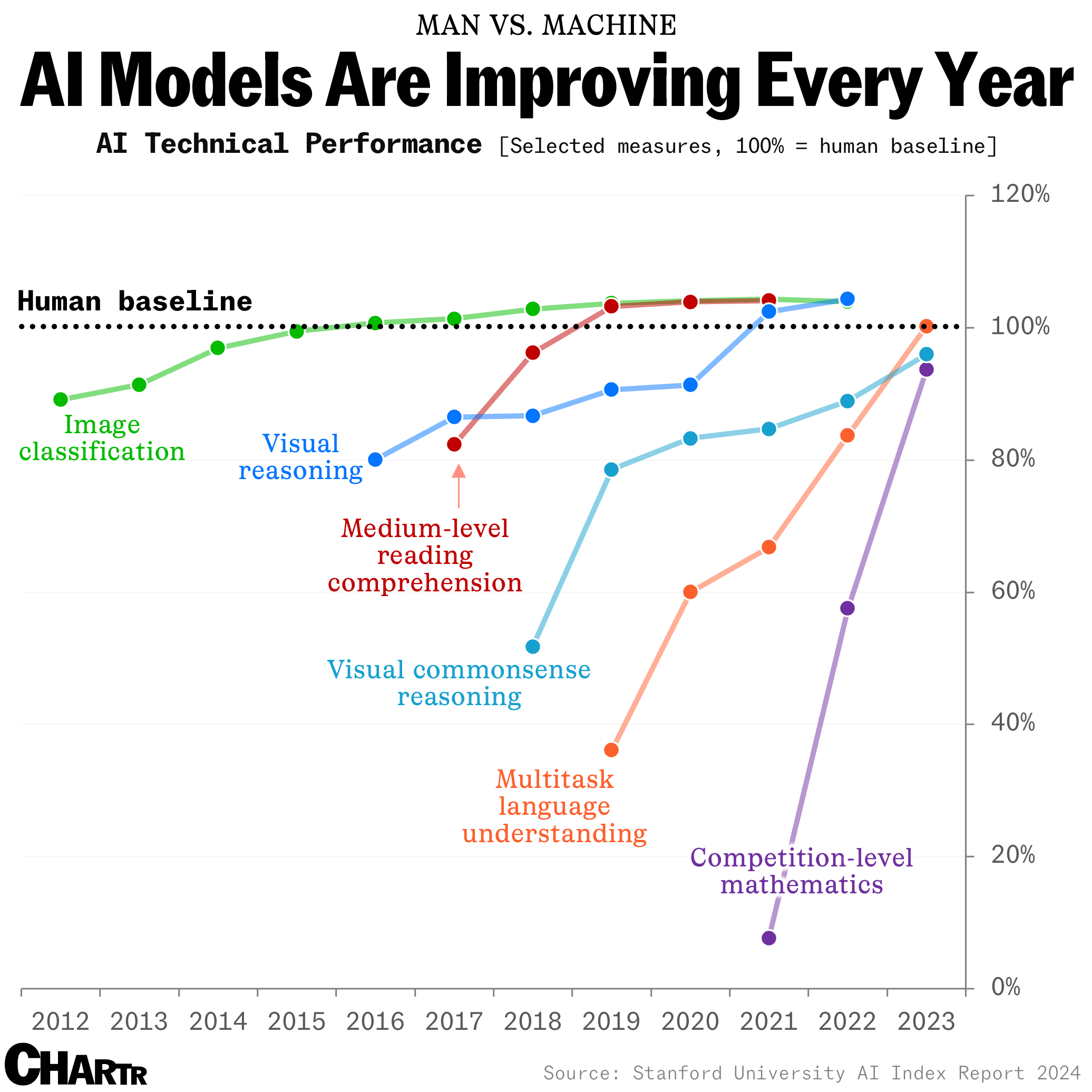

How long until the 'dumbest' models are smarter than your average person? Thanks for sharing this article, @Adam Danz.

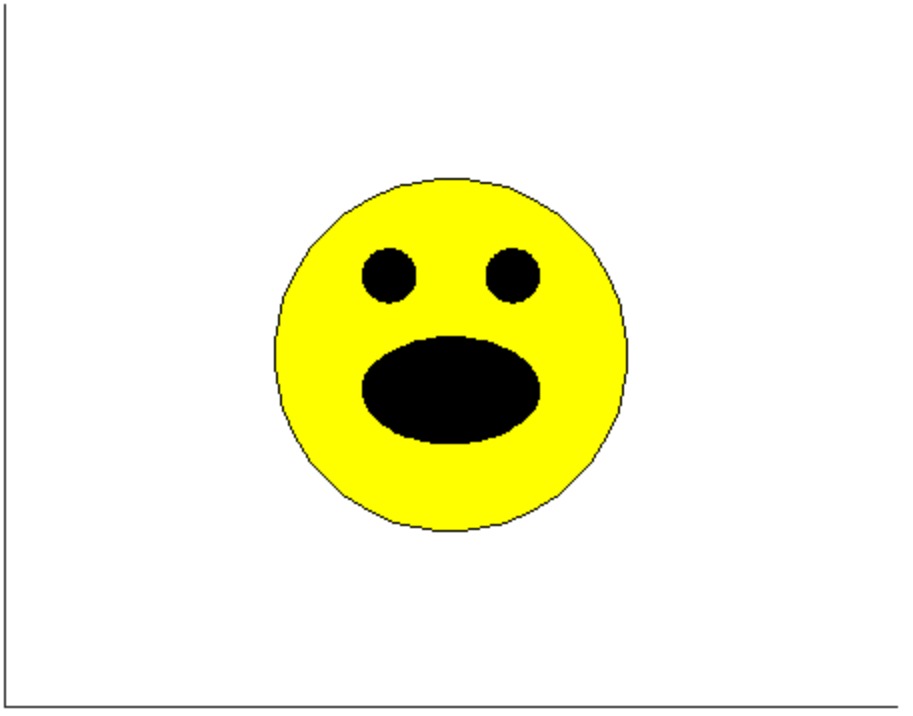

I was in a meeting the other day and a coworker shared a smiley face they created using the AI Chat Playground. The image looked something like this:

And I suspect the prompt they used was something like this:

"Create a smiley face"

I imagine this output wasn't what my coworker had expected, so they were left thinking that this was as good as it gets without manually editing the code and that the AI Chat Playground couldn't do any better.

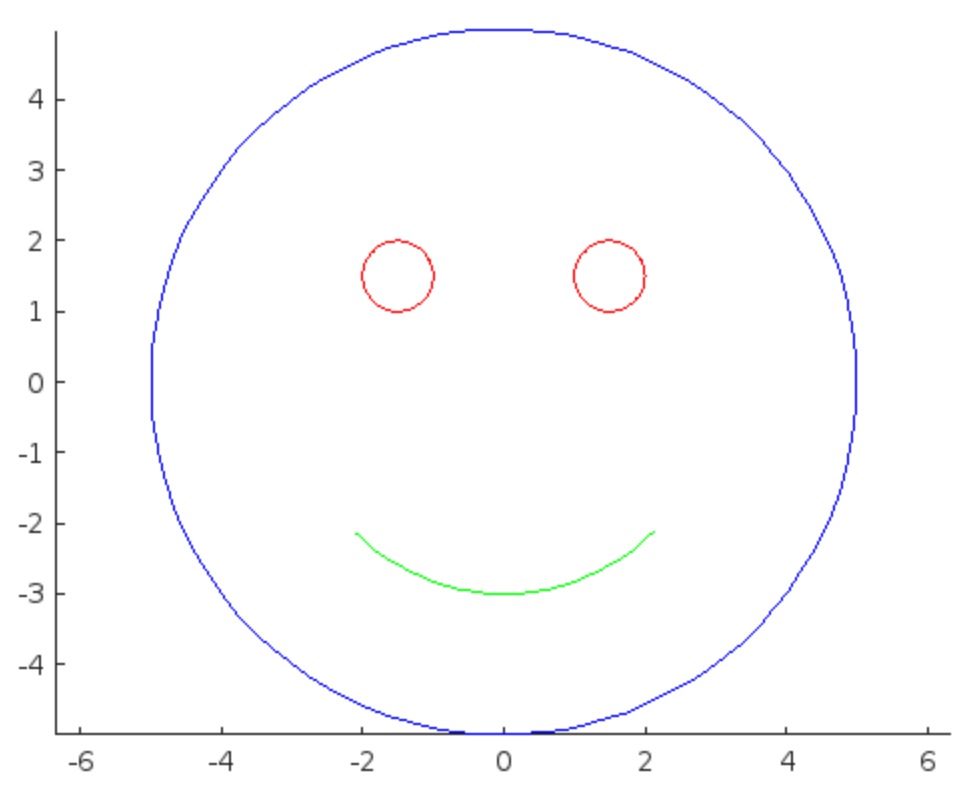

I thought I could get a better result using the Playground so I tried a more detailed prompt using a multi-step technique like this:

"Follow these instructions:

- Create code that plots a circle

- Create two smaller circles as eyes within the first circle

- Create an arc that looks like a smile in the lower part of the first circle"

The output of this prompt was better in my opinion.
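For anyone curious how the three instructions map onto code, here is a minimal sketch of the geometry they describe. It is written in plain Python rather than MATLAB, it is not the code the Playground produced, and the function names, radii, eye positions, and smile angles are all arbitrary assumptions chosen for illustration.

```python
import math

def circle(cx, cy, r, n=100):
    """Return n + 1 points tracing a closed circle of radius r centred at (cx, cy)."""
    return [(cx + r * math.cos(2 * math.pi * k / n),
             cy + r * math.sin(2 * math.pi * k / n)) for k in range(n + 1)]

def arc(cx, cy, r, start_deg, end_deg, n=50):
    """Return n + 1 points along an arc from start_deg to end_deg (in degrees)."""
    a0, a1 = math.radians(start_deg), math.radians(end_deg)
    return [(cx + r * math.cos(a0 + (a1 - a0) * k / n),
             cy + r * math.sin(a0 + (a1 - a0) * k / n)) for k in range(n + 1)]

def smiley():
    # Step 1: the face, a unit circle at the origin (assumed size).
    face = circle(0.0, 0.0, 1.0)
    # Step 2: two smaller circles as eyes in the upper half of the face.
    left_eye = circle(-0.35, 0.35, 0.1)
    right_eye = circle(0.35, 0.35, 0.1)
    # Step 3: a smile arc in the lower half; angles 200..340 degrees keep every point below y = 0.
    mouth = arc(0.0, 0.0, 0.6, 200, 340)
    return {"face": face, "left_eye": left_eye, "right_eye": right_eye, "mouth": mouth}

shapes = smiley()
```

Each list of (x, y) pairs can be drawn with any plotting library, for example matplotlib's `plt.plot(*zip(*points))`. Keeping each instruction as its own small piece of code mirrors the way the prompt was broken into steps.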

These queries/prompts are examples of 'zero-shot' prompts, the expectation being a good result from a single query, as opposed to a back-and-forth chat session that works toward a desired outcome.

I wonder how many attempts everyone makes before deciding they can't get anything more out of the AI/LLM. There are times I'll send dozens of chat queries if I feel like I'm getting close to my goal, while other times I'll try just one or two. One thing I always find useful is seeing how others interact with AI models, which is what inspired me to share this.

Does anyone have examples of techniques that work well? I find that multi-step instructions often produce good results.