Data Preprocessing for Deep Learning

From the series: Perception

In this video, Neha Goel and Connell D’Souza will go over the different steps required to prepare a dataset to be used in designing object detection deep learning models.

First, Neha demonstrates how to resize and randomly sample images to create three datasets for training, validation, and testing, and she discusses the importance of this step.

Next, the Ground Truth Labeler app is discussed for data labeling. You can use the Ground Truth Labeler app or the Video Labeler app to automate data labeling with either built-in or custom automation algorithms. Once the ground truth has been generated, the video also covers preparing this data for training a neural network.


Published: 3 Jan 2020

Hello and welcome to another episode of the MATLAB and Simulink Robotics Arena. Over the course of the next few videos, we're going to show you how to go from labeling data to training a YOLOv2 deep learning detector and deploying it to an NVIDIA Jetson TX1. To get things started, I've got Neha with me. Neha is the new deep learning person on our student competition team. Hello, Neha. I believe this is your first Robotics Arena video, right?

Yes.

Oh, well, welcome to the Robotics Arena.

Thank you.

So Neha is going to help us go over the different steps involved in labeling, training, and deploying deep learning detectors for your applications.

OK, let's dive into the first part of our workflow, which is data preprocessing. For this demo and the next few videos, we're using a data set that our friends from the Robotics Association at Embry-Riddle and their RoboSub team were kind enough to provide. We've posted it to a Google Drive repository that you can download to get started with the video.

Once you download the folders from Google Drive, you should see a folder structure like this show up in your current folder. We want you to keep it this way because our code is built to work with this folder structure. Let's take a look at some of the images that were provided to us. If we open one outside MATLAB, we see pictures of buoys from the RoboSub competition. Anyone taking part in RoboSub will be pretty familiar with these elements. Our data set contains many images like this.

All right, let's dive into the data preprocessing step of the workflow. First, download the data and make sure it's in the RoboSubFootage folder, with the folder structure we just described.

The next step that's involved in data preprocessing is image resizing. So Neha, I know in the process of us developing this demo, we spent a lot of time actually resizing the images. Can you tell us why image resizing is important for deep learning?

Yes. We resize images for almost every network, but here we are using the YOLOv2 architecture, which is designed to work best with an image size of 416 by 416 by 3. The images we received were very high resolution, larger than 1063 by 1063 by 3, so we resized them to 416 by 416 by 3 to suit the YOLOv2 architecture. Later on, when we talk about the deployment phase, you'll see that the images coming from the camera also need to be this same size.

Because that's the size that your network is expecting.

Yeah.
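The resizing code itself isn't shown in this transcript; in MATLAB this step is commonly done with imresize, for example imresize(I, [416 416]). To illustrate what resizing to the 416 by 416 by 3 YOLOv2 input does, here is a minimal pure-Python sketch, assuming an image stored as nested lists of RGB tuples. The helper name resize_nearest is hypothetical, and it uses nearest-neighbor sampling for simplicity, whereas practical pipelines usually use smoother interpolation.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # one RGB pixel
Image = List[List[Pixel]]      # rows of pixels: H x W x 3

YOLO_INPUT_HW = (416, 416)     # input size discussed in the video (height, width)


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Resize an H x W x 3 image by nearest-neighbor sampling (hypothetical helper)."""
    in_h, in_w = len(img), len(img[0])
    out: Image = []
    for r in range(out_h):
        # Source row containing the center of output row r (nearest-neighbor mapping).
        src_r = ((2 * r + 1) * in_h) // (2 * out_h)
        row = []
        for c in range(out_w):
            # Same mapping for columns.
            src_c = ((2 * c + 1) * in_w) // (2 * out_w)
            row.append(img[src_r][src_c])
        out.append(row)
    return out


if __name__ == "__main__":
    # A blank 1063 x 1063 x 3 frame standing in for one high-resolution image.
    frame = [[(0, 0, 0)] * 1063 for _ in range(1063)]
    small = resize_nearest(frame, *YOLO_INPUT_HW)
    print(len(small), len(small[0]), len(small[0][0]))  # 416 416 3
```

The same mapping works for downsampling and upsampling; the camera frames at deployment time would go through the same resize so the network always sees its expected input size.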

So what would happen if we gave the network a larger size image?

When I was working on this, I observed that the network doesn't perform as well. The reason is that the YOLOv2 architecture uses anchor boxes, which I'll talk about in the next video, and those anchor boxes are affected by the size of the image. So the YOLOv2 architecture performs best with this image size.
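To see why the input size matters for anchor boxes: anchors are widths and heights in pixels at the network's input size, but the same object covers a different number of pixels when the image is a different size. The Python sketch below is a hypothetical illustration, not code from the video. It rescales an [x, y, width, height] box from a 1063 by 1063 frame to 416 by 416 and compares both versions to an anchor using the width/height-only IoU commonly used when matching boxes to anchors; the function names, box, and anchor values are made up.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # [x, y, width, height] in pixels
Size = Tuple[int, int]                   # (height, width) in pixels


def scale_box(box: Box, src_hw: Size, dst_hw: Size) -> Box:
    """Rescale a box when its image is resized from src_hw to dst_hw."""
    sy = dst_hw[0] / src_hw[0]
    sx = dst_hw[1] / src_hw[1]
    x, y, w, h = box
    return (x * sx, y * sy, w * sx, h * sy)


def iou_wh(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """IoU of two boxes compared by width and height only (same corner alignment)."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


if __name__ == "__main__":
    buoy_full = (300.0, 400.0, 208.0, 260.0)   # hypothetical buoy box in a 1063 x 1063 frame
    buoy_small = scale_box(buoy_full, (1063, 1063), (416, 416))
    anchor = (80.0, 100.0)                     # hypothetical anchor chosen for 416 x 416 inputs
    print(iou_wh(buoy_full[2:], anchor))   # low: same object, wrong scale for this anchor
    print(iou_wh(buoy_small[2:], anchor))  # high: object and anchor at the same scale
```

Anchors picked for 416 by 416 images only match objects well when the images the network sees are also 416 by 416.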

The data set that we've provided through the Google Drive link has already been resized. We did that to make it easier to share, since the resized data isn't as large. So we don't need to run this section of code for this particular demonstration, but it's a step you would have to take when designing your own networks and working with your own data sets.

The next step is splitting the folders. Those of you who are familiar with deep learning know that we need multiple data sets: one to train on and another for validation. So is there a particular method to this folder-splitting madness?

Yes. The reason we split the folders is that we don't want the network to have seen all of our data before the testing phase. We train on the training set and divide the rest into validation and testing sets. The validation set is what we use when we want to change parameters or run experiments with our network.

Once we are happy with the results on the validation set, the testing set is used in the last phase, so that we can see how well our network performs on unseen images. Here, we divide the data set randomly, so every time the code runs it shuffles the images and produces a different random split.

So basically, it helps make sure that your network isn't being fit to one particular set of images.

Yes.
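The splitting script itself isn't shown here. A minimal Python sketch of the same idea, shuffling the list of images and cutting it into three disjoint sets, is below. The 70/15/15 ratios, the function name split_dataset, and the file names are assumptions for illustration, not values from the video.

```python
import random
from typing import List, Optional, Sequence, Tuple


def split_dataset(
    files: Sequence[str],
    fractions: Tuple[float, float, float] = (0.7, 0.15, 0.15),  # hypothetical ratios
    seed: Optional[int] = None,
) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle image paths and split them into disjoint train/validation/test lists."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(files)
    # Without a seed, every run produces a different random order (and split).
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = int(n * fractions[0])
    n_val = int(n * fractions[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to testing
    return train, val, test


if __name__ == "__main__":
    images = [f"frame_{i:04d}.png" for i in range(100)]  # hypothetical file names
    train, val, test = split_dataset(images)
    print(len(train), len(val), len(test))
```

Passing a seed makes a split reproducible, which can help when comparing experiments on the validation set; leaving it unset matches the "new shuffle every run" behavior described above.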

OK, awesome. Once we've assembled our data into training, validation, and testing folders (again, the data we are sharing with you has already been arranged in those folders), the next thing to do is create ground truth. We've done some videos on ground truth in the past, so I'm not going to spend a whole lot of time on it.

Basically, ground truth is information that you have gathered through direct observation. In our case, that means going through all of those images and labeling them. If I run this section of code, I'll show you the ground truth labeling session that we used.

What we see here is our entire training folder, which we've already labeled. As you can see, we used a few custom automation algorithms to label these images. There are other videos, linked in the description, that show how we did this.

If we step through the images, we'll see a bunch of labeled images containing the objects of interest. By the end of these four videos, we're going to create a multi-class object detector that can identify things like navigates and the different buoys, whose labels you can see up here.

Quickly walking through this code, we see that we're launching the labeler app with this prelabeled session. You can open it up on your own time to see what it looks like. I've also added some information here on how to use these custom automation algorithms. Again, there are other videos on this topic, but this is code you can use for that process.

Once we're done labeling the entire data set, we can export these labels as a ground truth data object by exporting them to the workspace. Let's just call it ground truth for now. If we go back into MATLAB, we should see that our workspace now contains a ground truth data object.

So what is a ground truth data object?

The ground truth data object is a special MATLAB data object that holds a bunch of information. This includes the source locations for all of our data, which in our case is the RoboSubFootage folder; information about the different kinds of labels, such as label descriptions; and the actual bounding box locations of the labels in each image. There's a lot of useful information there that we'll use further down the road to train our detector.
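The three kinds of information described here correspond roughly to the DataSource, LabelDefinitions, and LabelData properties of a MATLAB groundTruth object. As a hedged, language-neutral sketch, the Python dataclass below models those pieces; the class name, field names, and the buoy and gate labels are hypothetical, and the real MATLAB object uses its own formats for each property.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # [x, y, width, height] in pixels


@dataclass
class GroundTruthSketch:
    """Hypothetical stand-in for the information a ground truth object holds."""
    source_files: List[str]                   # data source: absolute image paths
    label_definitions: Dict[str, str]         # label name -> description
    label_data: List[Dict[str, List[Box]]]    # per image: label name -> boxes

    def __post_init__(self) -> None:
        if len(self.label_data) != len(self.source_files):
            raise ValueError("need one label_data entry per source file")

    def boxes_for(self, label: str) -> List[Tuple[str, Box]]:
        """Return every (image path, box) pair annotated with the given label."""
        if label not in self.label_definitions:
            raise KeyError(label)
        return [
            (path, box)
            for path, labels in zip(self.source_files, self.label_data)
            for box in labels.get(label, [])
        ]
```

Keeping the source paths alongside the boxes is what later lets the training data table pair each image file with its labels, and it is also why absolute paths saved on one machine need adjusting on another.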

Part of preparing the data to train the detector is converting the ground truth object into training, validation, and testing data. As we'll see in the next video, our MATLAB training functions accept data in a particular format. If we run this code, you'll see what I mean.

The meat of this code is the objectDetectorTrainingData function. It takes in the ground truth object that you've created, samples a subset of the data according to the SamplingFactor value that you specify, and creates a table. Here, we've got a trainingData table.

If we scroll through it, we see an imageFilename column holding the actual location of each image, along with the different labels and their bounding boxes. For the first few images we only have navigates, which reflects what we saw earlier in the app. As you scroll further, you'll see bounding boxes for the other kinds of labels as well.
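A rough Python analogue of that table is sketched below, assuming that a SamplingFactor of n means keeping every n-th image; check the objectDetectorTrainingData documentation for the exact semantics and defaults of the real function. The function name training_table and the label names are hypothetical, and each row is a dictionary with an imageFilename entry plus one list of [x, y, width, height] boxes per label.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # [x, y, width, height] in pixels


def training_table(
    files: Sequence[str],
    label_data: Sequence[Dict[str, List[Box]]],
    label_names: Sequence[str],
    sampling_factor: int = 1,
) -> List[Dict[str, object]]:
    """Keep every sampling_factor-th image and build one table row per kept image."""
    if sampling_factor < 1:
        raise ValueError("sampling_factor must be a positive integer")
    if len(files) != len(label_data):
        raise ValueError("need one label_data entry per file")
    rows: List[Dict[str, object]] = []
    for path, labels in list(zip(files, label_data))[::sampling_factor]:
        row: Dict[str, object] = {"imageFilename": path}
        for name in label_names:
            # One column per label; an empty list when the image has no such object.
            row[name] = list(labels.get(name, []))
        rows.append(row)
    return rows
```

Sampling every n-th frame is useful for footage where consecutive frames are nearly identical, since it reduces redundant training images.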

One thing to keep in mind is that the ground truth data object stores your source locations as absolute paths. We developed all of this code on the computer we're recording on, so it doesn't throw an error for us. But the ground truth data object you load was saved with absolute paths for our computer, so when you use this code you'll have to adjust those paths for your own computer.

To help with this, we've provided the adjustGroundTruthPaths file. The first time you download and run this code, I highly recommend running this file first to adjust all the paths for your computer.
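The adjustGroundTruthPaths file itself isn't shown in this transcript. The core idea, swapping the old root folder for your own while keeping each file's location relative to that root, can be sketched in Python as below. The function name adjust_paths and the example paths are hypothetical, and the sketch assumes the new machine uses POSIX-style (forward-slash) paths.

```python
import re
from pathlib import PurePosixPath
from typing import List, Sequence


def adjust_paths(paths: Sequence[str], old_root: str, new_root: str) -> List[str]:
    """Re-root absolute image paths saved on one machine onto another machine's folder."""
    old = old_root.rstrip("\\/")
    adjusted = []
    for p in paths:
        # Require a whole-folder match, so a root ending in RoboSubFootage
        # does not also match a sibling folder named RoboSubFootage2.
        if not (p == old or (p.startswith(old) and p[len(old)] in "\\/")):
            raise ValueError(f"{p!r} is not under {old_root!r}")
        relative = p[len(old):]
        # Split on either separator so Windows-style saved paths are handled too.
        parts = [part for part in re.split(r"[\\/]+", relative) if part]
        adjusted.append(str(PurePosixPath(new_root, *parts)))
    return adjusted


if __name__ == "__main__":
    saved = [r"C:\Users\someone\RoboSubFootage\train\img001.png"]  # hypothetical saved path
    print(adjust_paths(saved, r"C:\Users\someone\RoboSubFootage", "/home/me/RoboSubFootage"))
```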

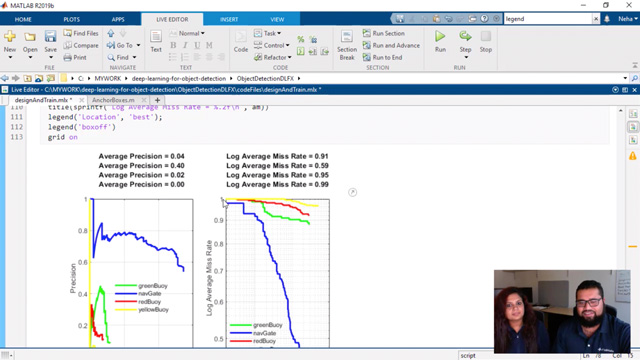

Now that we've run this section, we see three tables saved in our workspace: TestData, trainingData, and ValidationData. In the next video, we're going to use these tables to train our detector, so stick around. In the meantime, if you need any help, you can get in touch with us through our Facebook group or our email address. Stay tuned for another episode, and we'll see you soon.