pitchnn

Syntax

Description

f0 = pitchnn(audioIn,fs,Name,Value) specifies options using one or more Name,Value arguments. For example, f0 = pitchnn(audioIn,fs,'ConfidenceThreshold',0.5) sets the confidence threshold for each value of f0 to 0.5.
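A minimal usage sketch of the call above. It assumes Audio Toolbox, Deep Learning Toolbox, and the pretrained CREPE model are installed. The 220 Hz test tone and the 16 kHz sample rate are arbitrary illustrative choices. The sketch also assumes that frames falling below the confidence threshold come back as NaN, so it summarizes the result with median(...,'omitnan'). If that assumption does not hold, the summary still works.

```matlab
% Synthetic input: one second of a 220 Hz sine tone (arbitrary test signal)
fs = 16e3;                        % sample rate in Hz
t = (0:fs-1).'/fs;                % column vector of sample times
audioIn = sin(2*pi*220*t);        % single-channel audio, one column

% Estimate f0, using a confidence threshold of 0.5 for each estimate
f0 = pitchnn(audioIn,fs,'ConfidenceThreshold',0.5);

% Summarize the estimates, ignoring any NaN (assumed low-confidence) frames
fprintf('Median f0: %.1f Hz\n', median(f0,'omitnan'));
```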

[f0,loc,activations] = pitchnn(___) returns the activations of a pretrained CREPE network [1].
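The activations output is related to the confidence threshold. In CREPE [1], the network produces one activation per pitch bin for each frame, using 360 bins. The sketch below shows how a per-frame threshold could be applied to such activations. It runs on hypothetical data and does not call pitchnn, so it is not that function's implementation. It rests on three assumptions that this page does not state:

- activations are laid out frames-by-bins;
- each frame's peak activation serves as its confidence score;
- low-confidence frames are marked NaN.

```matlab
% Hypothetical activations: 4 frames by 360 pitch bins (layout assumed)
activations = zeros(4,360);
activations(1,100) = 0.9;
activations(2,150) = 0.3;
activations(3,200) = 0.7;
activations(4,250) = 0.1;
f0 = [220; 330; 440; 550];                % hypothetical pitch estimates in Hz

threshold = 0.5;                          % plays the role of 'ConfidenceThreshold'
confidence = max(activations,[],2);       % peak activation of each frame
f0Masked = f0;
f0Masked(confidence < threshold) = NaN;   % discard low-confidence frames
% f0Masked is [220; NaN; 440; NaN]
```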

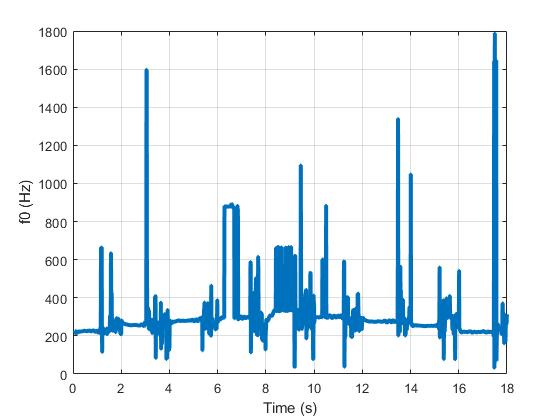

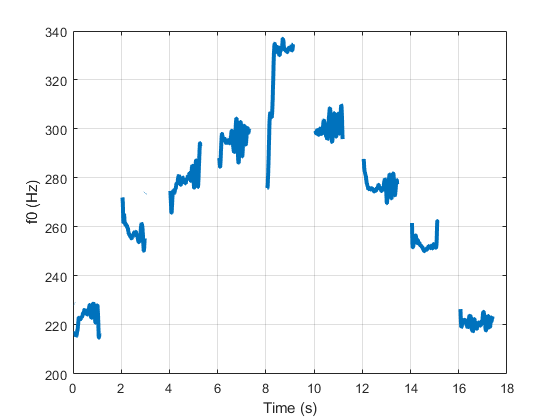

pitchnn(___) with no output arguments plots the estimated fundamental frequency over time.
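A short sketch of the plotting form. It makes the same installation assumptions as a direct call: Audio Toolbox, Deep Learning Toolbox, and the pretrained CREPE model. The test tone is an arbitrary choice.

```matlab
% Synthetic input: one second of a 220 Hz sine tone (arbitrary test signal)
fs = 16e3;
t = (0:fs-1).'/fs;
audioIn = sin(2*pi*220*t);

% No output arguments: pitchnn plots the estimated f0 over time
pitchnn(audioIn,fs)
```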

Examples

Input Arguments

Name-Value Arguments

Output Arguments

References

[1] Kim, Jong Wook, Justin Salamon, Peter Li, and Juan Pablo Bello. “Crepe: A Convolutional Representation for Pitch Estimation.” In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 161–65. Calgary, AB: IEEE, 2018. https://doi.org/10.1109/ICASSP.2018.8461329.

Extended Capabilities

Version History

Introduced in R2021a