Automate Testing for Forward Vehicle Sensor Fusion

This example shows how to automate testing of a forward vehicle sensor fusion algorithm and its generated code by using Simulink® Test™. In this example, you:

Assess the behavior of a sensor fusion and tracking algorithm on different test scenarios with different test requirements.

Automate testing of the sensor fusion and tracking algorithm and the generated code for the algorithm.

Introduction

The sensor fusion and tracking algorithm is a fundamental perception component of an automated driving application. The tracker analyzes the sensor data and tracks the objects on the road. You can design, simulate, and evaluate the performance of a sensor fusion and tracking algorithm using MATLAB® and Simulink®. You can define system requirements to configure test scenarios for simulation. You can integrate the sensor fusion and tracking algorithm in an external software environment and deploy it to a vehicle through C++ code generation. Code generation and verification of the Simulink model ensure functional equivalence between simulation and real-time implementation. Automatically running these simulations enables regression testing to verify system-level functionality.

For information about how to design a sensor fusion and tracking algorithm, see the Forward Vehicle Sensor Fusion example. This example shows how to automate testing of the sensor fusion and tracking algorithm against multiple scenarios using Simulink Test. The scenarios are based on system-level requirements. In this example, you:

Review requirements — Explore the test scenarios and review the requirements that describe the test conditions.

Review the test bench model — Review the forward vehicle sensor fusion test bench model, which contains metric assessments. These metric assessments integrate the test bench model with Simulink Test for automated testing.

Disable runtime visualizations — Disable runtime visualizations to reduce execution time for automated testing.

Automate testing — Configure the Test Manager to simulate each test scenario, assess success criteria, and report the results. You can explore the results dynamically in the Test Manager and export them to a PDF for external reviews.

Automate testing with generated code — Configure the forward vehicle sensor fusion component to generate C++ code. Run automated tests on the generated code and verify behavior.

Automate testing in parallel — Increase overall execution speed for the tests by using parallel computing on a multicore computer.

In this example, you enable system-level simulation through integration with the Unreal Engine® from Epic Games®.

if ~ispc
    error(['This example is only supported on Microsoft', ...
        char(174), ' Windows', char(174), '.']);
end

Review Requirements

This example provides 11 test scenarios for evaluating the model. To define the high-level testing requirements for each scenario, use Requirements Toolbox™.

To explore the test bench model, load the forward vehicle sensor fusion project.

openProject("FVSensorFusion");
open("ForwardVehicleSensorFusionTestRequirements.slreqx")

You can also open the file using the Requirements tab of the Requirements Manager app in Simulink.

The Requirements Editor displays information about the test scenarios and the test requirements in textual and graphical format. To test a sensor fusion and tracking algorithm, use these scenario files.

- scenario_LFACC_01_Curve_DecelTarget: Curved road scenario with a decelerating lead vehicle in the ego lane.

- scenario_LFACC_02_Curve_AutoRetarget: Curved road scenario with changing lead vehicles in the ego lane. This scenario tests the ability of the ego vehicle to retarget to a new lead vehicle while driving along a curve.

- scenario_LFACC_03_Curve_StopnGo: Curved road scenario with a lead vehicle slowing down in the ego lane.

- scenario_LFACC_04_Curve_CutInOut: Curved road scenario with a lead vehicle cutting into the ego lane to overtake a slow-moving vehicle in the adjacent lane, and then cutting out of the ego lane.

- scenario_LFACC_05_Curve_CutInOut_TooClose: Curved road scenario with a lead car cutting aggressively into the ego lane.

- scenario_LFACC_06_Straight_StopandGoLeadCar: Straight road scenario with a lead vehicle that breaks down in the ego lane.

- scenario_FVSF_01_Curve_FourVehicles: Curved road scenario with a lead car cutting out of the ego lane to overtake a slow-moving car.

- scenario_FVSF_02_Straight_FourVehicles: Straight road scenario where non-ego vehicles vary their velocity.

- scenario_FVSF_03_Curve_SixVehicles: Curved road scenario where the ego car varies its velocity.

- scenario_FVSF_04_Straight_ThreeVehicles: Straight road scenario with the ego car and two target vehicles. The lead vehicle travels in the ego lane and the other target vehicle travels in the adjacent right lane.

- scenario_FVSF_05_Straight_TwoVehicles: Straight road scenario with the ego vehicle and a target vehicle that travels in the adjacent lane to the right of the ego lane.

These test scenarios have the same names as the scenarios used in the ForwardVehicleSensorFusionTestBench model.

Review Test Bench Model

This example reuses the ForwardVehicleSensorFusionTestBench model from the Forward Vehicle Sensor Fusion example.

Open the test bench model.

open_system("ForwardVehicleSensorFusionTestBench")

To configure the test bench model, use the helperSLForwardVehicleSensorFusionSetup script. Specify a test scenario as input to the setup script by using the scenarioFcnName input argument. The value for scenarioFcnName must be one of the scenario names specified in the test requirements.

Run the setup script.

helperSLForwardVehicleSensorFusionSetup(scenarioFcnName="scenario_LFACC_03_Curve_StopnGo")
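The setup script configures one scenario at a time. Outside the Test Manager, you could step through several scenarios with a loop like the following minimal sketch. It assumes the FVSensorFusion project is open and that `sim` runs the test bench with the configuration the setup script leaves in place; the scenario subset and the `simOut` variable are illustrative only.

```matlab
% Sketch: configure and simulate the test bench for several scenarios.
% Assumes the FVSensorFusion project is open; the scenario subset is arbitrary.
scenarios = ["scenario_LFACC_03_Curve_StopnGo", ...
             "scenario_FVSF_02_Straight_FourVehicles"];
simOut = cell(1, numel(scenarios));
for k = 1:numel(scenarios)
    % Load the scenario and its parameters for the test bench.
    helperSLForwardVehicleSensorFusionSetup(scenarioFcnName=scenarios(k));
    % Simulate with the configuration the setup script just applied.
    simOut{k} = sim("ForwardVehicleSensorFusionTestBench");
end
```

In the automated workflow, each test case runs the setup script from its POST-LOAD callback instead, so this loop is not needed when you use the Test Manager.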

You can now simulate the model and visualize the results. For more details on simulating the model and analyzing the results, see the Forward Vehicle Sensor Fusion example.

This example focuses on automating the simulation runs to test the sensor fusion and tracking algorithm on different driving scenarios by using Simulink Test. The Evaluate Tracker Metrics subsystem integrates the component-level metric evaluations with Simulink Test by using the Check Static Upper Bound block.

Open the Evaluate Tracker Metrics subsystem.

open_system("ForwardVehicleSensorFusionTestBench/Evaluate Tracker Metrics")

The Evaluate Tracker Metrics subsystem outputs the generalized optimal subpattern assignment (GOSPA) metric and its components.
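For reference, a commonly used form of GOSPA with α = 2 is shown here. X is the set of ground-truth objects, Y is the set of tracks, d is the distance between a truth and a track, c is the cutoff distance, p is the order, and Γ is the set of assignments γ between X and Y whose pairs all satisfy d(x_i, y_j) < c. The first term is the localization error, the second is the missed-target error (unassigned truths), and the third is the false-track error (unassigned tracks). This is the textbook definition rather than a description of the GOSPA Metric block internals; see trackGOSPAMetric for the exact outputs and default parameters.

$$
d_p^{(c,2)}(X,Y) = \left( \min_{\gamma \in \Gamma} \left[ \sum_{(i,j) \in \gamma} d(x_i, y_j)^p + \frac{c^p}{2}\bigl(|X| - |\gamma|\bigr) + \frac{c^p}{2}\bigl(|Y| - |\gamma|\bigr) \right] \right)^{1/p}
$$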

The Filter Within Coverage block removes from the ground truth information the actors that lie outside the coverage area of the sensors. As shown in this figure, the block removes the purple and yellow vehicles from the truth list because these vehicles lie outside the coverage areas of both sensors. The GOSPA Metric block uses the filtered actors to compute the GOSPA metric and its components. The model logs the output results from the Evaluate Tracker Metrics subsystem to the base workspace variable logsout. You can verify the performance of the sensor fusion and tracking algorithm by validating and plotting the GOSPA metrics computed during the simulation. For more details on GOSPA metrics, see trackGOSPAMetric (Sensor Fusion and Tracking Toolbox).
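To make the filtering and scoring steps concrete, here is a minimal brute-force sketch in plain MATLAB with no toolboxes. It is not how the Filter Within Coverage or GOSPA Metric blocks are implemented: it assumes a single hypothetical coverage region defined by a maximum range and a half field of view in the ego frame, uses made-up truth and track positions, and computes GOSPA with α = 2 by enumerating every truth-to-track assignment, which is only practical for a handful of objects. All variable names are illustrative.

```matlab
% Sketch: keep truths inside a coverage region, then score tracks with GOSPA.
% All values are hypothetical; GOSPA uses alpha = 2 and brute-force assignment.
truths = [20 0; 40 1; 150 0; 10 30];  % truth positions [x y] (m), ego frame
tracks = [21 0; 70 0];                % track positions [x y] (m)
maxRange = 100;                       % assumed coverage range (m)
halfFov = 30;                         % assumed coverage half-angle (deg)
c = 5;                                % cutoff distance (m)
p = 2;                                % order of the metric

% Coverage filter: keep truths within range and within the field of view.
rangeT = hypot(truths(:, 1), truths(:, 2));
azimuth = atan2d(truths(:, 2), truths(:, 1));
truthsIn = truths(rangeT <= maxRange & abs(azimuth) <= halfFov, :);

% Pairwise truth-to-track distances.
M = size(truthsIn, 1);
N = size(tracks, 1);
D = zeros(M, N);
for ii = 1:M
    for jj = 1:N
        D(ii, jj) = norm(truthsIn(ii, :) - tracks(jj, :));
    end
end

% Start from the cost of assigning nothing, then try every injective map
% from the smaller set into the larger one. Pairs at or beyond c count as
% unassigned, so this search also covers every partial assignment.
best = c^p / 2 * (M + N);
loc = 0; missed = c^p / 2 * M; falseTrk = c^p / 2 * N;
if M > 0 && N > 0
    Ds = D;
    if M > N
        Ds = D.';                     % enumerate from the smaller set
    end
    [nSmall, nLarge] = size(Ds);
    P = perms(1:nLarge);
    P = unique(P(:, 1:nSmall), 'rows');
    for k = 1:size(P, 1)
        d = Ds(sub2ind(size(Ds), 1:nSmall, P(k, :)));
        valid = d < c;
        nA = nnz(valid);
        locK = sum(d(valid) .^ p);
        cost = locK + c^p / 2 * (M - nA) + c^p / 2 * (N - nA);
        if cost < best
            best = cost;
            loc = locK;
            missed = c^p / 2 * (M - nA);    % unassigned truths
            falseTrk = c^p / 2 * (N - nA);  % unassigned tracks
        end
    end
end
gospa = best ^ (1 / p);
fprintf('GOSPA = %.3f\n', gospa);
```

With these inputs, the filter drops the truths at 150 m range and at about 72 degrees azimuth, only one truth-track pair lies within the 5 m cutoff, and the script prints `GOSPA = 5.099`, the square root of 1 + 12.5 + 12.5. A real evaluation would typically use an optimal assignment solver rather than enumeration.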

Disable Runtime Visualizations

The test bench model opens an Unreal Engine simulation window for visualizing the scenario. You do not need this window while running automated tests. Configure the Simulation 3D Scene Configuration block to run the Unreal Engine in headless mode, where the 3D simulation window is disabled.

blk = ['ForwardVehicleSensorFusionTestBench/Sensors and Environment/', ...
    'Simulation 3D Scene Configuration'];
set_param(blk, EnableWindow="off")

Automate Testing

Open the ForwardVehicleSensorFusionTests.mldatx test file in the Test Manager. The test file is configured to automate the testing of the sensor fusion and tracking algorithm.

sltestmgr

testFile = sltest.testmanager.load("ForwardVehicleSensorFusionTests.mldatx");

The test cases in the Test Manager are linked to the test requirements in the Requirements Editor. Each test case uses the POST-LOAD callback to run the setup script with appropriate inputs. After simulating the test case, the Test Manager invokes the helperPlotForwardVehicleSensorFusionResults function from the CLEANUP callback to generate the plots for GOSPA and its components from the Evaluate Tracker Metrics subsystem. For more information about these plots, see the Forward Vehicle Sensor Fusion example.

Run and Explore Results for Single Test Scenario

Test the system-level model on the scenario_LFACC_03_Curve_StopnGo scenario.

testSuite = getTestSuiteByName(testFile, "Test Scenarios");
testCase = getTestCaseByName(testSuite, "scenario_LFACC_03_Curve_StopnGo");
resultObj = run(testCase);

Generate a PDF report of the test results.

sltest.testmanager.report(resultObj, "Report.pdf", ...
    Title="Forward Vehicle Sensor Fusion", ...
    IncludeMATLABFigures=true, IncludeErrorMessages=true, ...
    IncludeTestResults=false, LaunchReport=true);

Examine Report.pdf. The Test environment section shows the platform on which the test is run and the MATLAB version used for testing. The Summary section shows the outcome of the test and the duration of the simulation in seconds. The Results section shows pass or fail results based on the assessment criteria, and displays the plots logged from the helperPlotForwardVehicleSensorFusionResults function.

Run and Explore Results for All Test Scenarios

Run a simulation of the system for all the tests by using the run(testFile) command. Alternatively, you can click Play in the Test Manager app.

View the results in the Results and Artifacts tab of the Test Manager. For each test case, the Check Static Upper Bound blocks in the model are associated with the Test Manager. This association enables you to visualize the overall pass or fail results.

You can find the generated report in your current working directory. This report contains a detailed summary of the pass or fail statuses and plots for each test case.
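The run and report steps can also be combined in a script. This sketch reuses the `testFile` object loaded earlier and the report options from the single-scenario run. It assumes that the result set returned by `run` exposes `NumPassed` and `NumFailed` counts, and the report file name is arbitrary.

```matlab
% Sketch: run every test case in the loaded test file and report the results.
% Assumes testFile was loaded with sltest.testmanager.load as shown earlier.
resultSet = run(testFile);

% Print a quick pass/fail summary (assumes NumPassed/NumFailed properties).
fprintf("%d passed, %d failed\n", resultSet.NumPassed, resultSet.NumFailed);

% Write one PDF report that covers all test cases ("AllScenarios.pdf" is arbitrary).
sltest.testmanager.report(resultSet, "AllScenarios.pdf", ...
    Title="Forward Vehicle Sensor Fusion", ...
    IncludeMATLABFigures=true, IncludeErrorMessages=true, ...
    LaunchReport=false);
```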

Verify Test Status in Requirements Editor

Open the Requirements Editor and select Display. Then, select Verification Status to see a verification status summary for each requirement. Green and red bars indicate the pass or fail status, respectively, for each simulation test result.

Automate Testing with Generated Code

The ForwardVehicleSensorFusionTestBench model enables regression testing of the Forward Vehicle Sensor Fusion component through software-in-the-loop (SIL) verification, which helps you identify issues in this component. This workflow verifies that the generated code produces expected results that match the system-level requirements throughout the simulation.

Set the Forward Vehicle Sensor Fusion component to run in SIL mode.

model = "ForwardVehicleSensorFusionTestBench/Forward Vehicle Sensor Fusion";
set_param(model, SimulationMode="Software-in-the-loop");

Simulate the system for all test scenarios using the run(testFile) command. After the tests are complete, review the plots and results in the generated report.
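The SIL setting stays on the Model block until you change it, so a script that runs the SIL tests may want to return the component to normal mode afterward. This is a minimal sketch, assuming `testFile` and the block path from above; the `silResults` name is illustrative. The `try`/`catch` restores the mode even if the test run throws an error.

```matlab
% Sketch: run all tests with the component in SIL mode, then restore Normal mode.
model = "ForwardVehicleSensorFusionTestBench/Forward Vehicle Sensor Fusion";
set_param(model, SimulationMode="Software-in-the-loop");
try
    silResults = run(testFile);
catch err
    % Restore normal simulation before passing the error on.
    set_param(model, SimulationMode="Normal");
    rethrow(err);
end
set_param(model, SimulationMode="Normal");
```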

Capture Coverage Results

If you have a Simulink Coverage™ license, you can get the code coverage analysis for the generated code to measure the testing completeness. You can use the coverage data to find gaps in testing, missing requirements, or unintended functionality. You can visualize the coverage results for individual test cases, as well as aggregated coverage results.

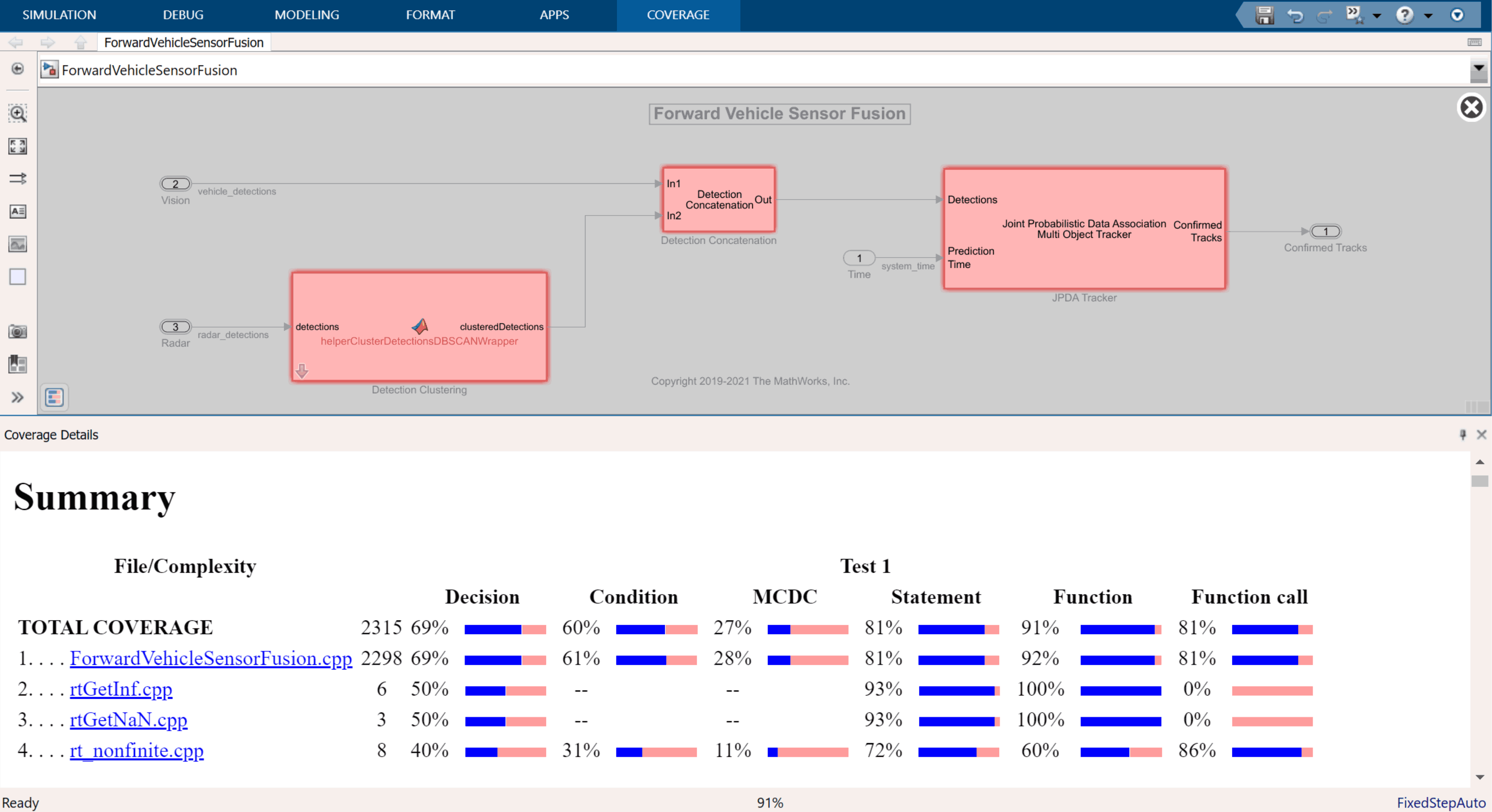

Select ForwardVehicleSensorFusion in the AGGREGATED COVERAGE RESULTS section of the Test Manager to view a detailed report of the coverage results.
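Coverage collection is configured on the test file. The following is a hedged sketch of turning it on from the command line before running the tests. It assumes the `getCoverageSettings` method of the test file object and its `RecordCoverage` and `MdlRefCoverage` properties; check the Simulink Test documentation for the coverage metric options your release supports.

```matlab
% Sketch: enable coverage collection for the test file, then run it.
% Assumes testFile from sltest.testmanager.load and a Simulink Coverage license.
covSettings = getCoverageSettings(testFile);
covSettings.RecordCoverage = true;   % record coverage for the system under test
covSettings.MdlRefCoverage = true;   % include referenced models such as ForwardVehicleSensorFusion
resultSet = run(testFile);
```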

Automate Testing in Parallel

If you have a Parallel Computing Toolbox™ license, you can configure the Test Manager to execute tests in parallel using a parallel pool. To run the tests in parallel, disable the runtime visualizations and save the models using save_system("ForwardVehicleSensorFusion") and save_system("ForwardVehicleSensorFusionTestBench"). The Test Manager uses the default Parallel Computing Toolbox cluster and runs tests only on the local machine. Running tests in parallel can reduce the total time required for testing. For more information on how to configure tests in parallel using the Test Manager, see Run Tests Using Parallel Execution (Simulink Test).
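The preparation steps for a parallel run can be scripted. This sketch reuses the block path from the Disable Runtime Visualizations step, saves both models, and starts a pool on the default cluster profile if none is running. Enabling parallel execution itself is still done in the Test Manager, as described in the linked topic.

```matlab
% Sketch: prepare the models for parallel test execution.
% Assumes the project is open and Parallel Computing Toolbox is installed.
blk = ['ForwardVehicleSensorFusionTestBench/Sensors and Environment/', ...
    'Simulation 3D Scene Configuration'];
set_param(blk, EnableWindow="off");   % run the Unreal Engine headless

% Save both models so that workers load the updated configuration from disk.
save_system("ForwardVehicleSensorFusion");
save_system("ForwardVehicleSensorFusionTestBench");

% Start a pool on the default cluster profile unless one already exists.
if isempty(gcp("nocreate"))
    parpool;
end
```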

See Also

Topics

- Forward Vehicle Sensor Fusion

- Integrate and Verify C++ Code of Sensor Fusion Algorithm in Simulink

- Automate Real-Time Testing for Forward Vehicle Sensor Fusion

- Automate PIL Testing for Forward Vehicle Sensor Fusion

- Automate Testing for Highway Lane Following Controls and Sensor Fusion

- Automate Real-Time Testing of Highway Lane Following Controller Using ASAM XIL

- Automate Testing for Lane Marker Detector

- Automate Testing for Vision Vehicle Detector

- Highway Lane Following