Detect Object Collisions

Use collision detection to model the physical constraints of real-world objects accurately, for example to keep two objects from occupying the same place at the same time. You can use the outputs of collision detection nodes to:

Change the state of other virtual world nodes.

Apply MATLAB® algorithms to collision data.

Drive Simulink® models.

Set Up Collision Detection

To set up collision detection, define collision (pick) sensors that detect when they collide with specified target objects in the surrounding scene. The virtual world sensors resemble real-world sensors, such as ultrasonic, lidar, and touch sensors. The Simulink 3D Animation™ sensors are based on X3D sensors (also supported for VRML), as described in the X3D picking component specification. For descriptions of pick sensor output properties that you can access with VR Source and VR Sink blocks, see Use Collision Detection Data in Models.

You can define these pick sensors:

PointPickSensor – Point clouds that detect which of the points are inside colliding geometries

LinePickSensor – Ray fans or other sets of lines that detect the distance to the colliding geometries

PrimitivePickSensor – Primitive geometries (such as a cone, sphere, or box) that detect colliding geometries
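To make the PointPickSensor idea concrete, here is a minimal conceptual sketch in Python. It is not the Simulink 3D Animation API: it assumes each pick target can be approximated by an axis-aligned box, and the names Box and picked_points are hypothetical. It reports which points of a point cloud lie inside any target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its minimum and maximum corners (hypothetical pick target model)."""
    lo: tuple
    hi: tuple

    def contains(self, p):
        # A point is inside when every coordinate lies within [lo, hi].
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


def picked_points(points, targets):
    """Return the points of the cloud that lie inside at least one target box."""
    return [p for p in points if any(t.contains(p) for t in targets)]


if __name__ == "__main__":
    wall = Box((2.0, 0.0, -1.0), (2.5, 2.0, 1.0))
    cloud = [(0.0, 0.5, 0.0), (2.2, 0.5, 0.0), (3.0, 0.5, 0.0)]
    print(picked_points(cloud, [wall]))  # [(2.2, 0.5, 0.0)]
```

A real sensor tests against the actual target geometry; the box approximation only keeps the sketch short.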

To add a collision detection sensor, use these general steps.

In the 3D World Editor tree structure pane, select the children node of the Transform node to which you want to add a pick sensor.

To create the picking geometry to use with the sensor, add a geometry node. Select Nodes > Add > Geometry and select a geometry appropriate to the type of pick sensor (for example, Point Set).

Add a pick sensor node by selecting Nodes > Add > Pick Sensor Node.

In the sensor node, right-click the pickingGeometry property and select USE. Specify the geometry node that you created for the sensor. Instead of specifying the picking geometry with USE, you can define the picking geometry directly in the sensor node. However, directly defined geometry is invisible.

Also in the sensor node, right-click the pickTarget property and select USE. Specify the target objects for which you want the sensor to detect collisions.

Optionally, change default property values or specify other values for sensor properties. For information about the intersectionType property, see Sensor Collisions with Multiple Object Pick Targets. For descriptions of output properties that you can access with a VR Source block, see Use Collision Detection Data in Models.

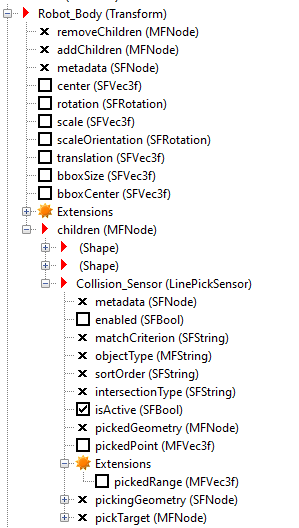

The Robot_Body node has the Line_Set node as one of its children. The Line_Set node defines the picking geometry for the sensor.

The Collision_Sensor node defines the collision detection sensor for the robot. In the sensor node, the pickingGeometry property uses the Line_Set node as the picking geometry, and the pickTarget property uses the Walls_Obstacles node as the target for collision detection.

Sensor Collisions with Multiple Object Pick Targets

To control how a pick sensor behaves when it collides with a pick target geometry that consists of multiple objects, use the intersectionType property. Possible values are:

GEOMETRY – The sensor collides with the union of the individual bounding boxes of all objects defined in the pickTarget field. In general, this setting produces more exact results.

BOUNDS – (Default) The sensor collides with one large bounding box constructed around all objects defined in the pickTarget field.
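The difference between the two intersectionType values, as described above, can be sketched in Python. This is a conceptual model only, assuming a single test point and axis-aligned boxes; Box, enclosing_box, and collides are hypothetical names. A point in the gap between two walls collides under BOUNDS but not under GEOMETRY.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box given by its min and max corners (hypothetical)."""
    lo: tuple
    hi: tuple

    def contains(self, p):
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


def enclosing_box(boxes):
    """Smallest axis-aligned box that encloses all the given boxes."""
    lo = tuple(min(b.lo[i] for b in boxes) for i in range(3))
    hi = tuple(max(b.hi[i] for b in boxes) for i in range(3))
    return Box(lo, hi)


def collides(point, targets, intersection_type="BOUNDS"):
    """Model the two intersectionType modes as this section describes them."""
    if intersection_type == "BOUNDS":
        # One large box constructed around every pick target.
        return enclosing_box(targets).contains(point)
    if intersection_type == "GEOMETRY":
        # Union of the individual bounding boxes of the pick targets.
        return any(t.contains(point) for t in targets)
    raise ValueError(f"unknown intersectionType: {intersection_type}")


if __name__ == "__main__":
    left_wall = Box((0, 0, 0), (1, 2, 1))
    right_wall = Box((3, 0, 0), (4, 2, 1))
    gap = (2, 1, 0.5)  # empty space between the two walls
    print(collides(gap, [left_wall, right_wall], "BOUNDS"))    # True
    print(collides(gap, [left_wall, right_wall], "GEOMETRY"))  # False
```

The false hit under BOUNDS is why GEOMETRY generally gives more exact results when targets are spread out.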

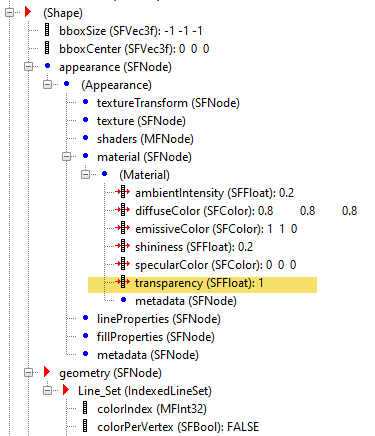

Make Picking Geometry Transparent

You can make the picking geometry used for a pick sensor invisible in the virtual world. In the Material node of the picking geometry, set the transparency property to 1 (fully transparent). For example, in the Materials virtual world node, change the transparency property to 1.

Avoid Impending Collisions

To act on an impending collision before it actually occurs, use the pickedRange output property of a LinePickSensor. As part of the line set picking geometry, define one or more long lines whose length reflects how much advance notice of an impending collision you want. You can make those lines transparent. Then create logic based on the pickedRange value.
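The following Python sketch illustrates why long picking lines give advance notice. It is a conceptual model, not the Simulink 3D Animation API: it assumes one range value per picking line (None when the line does not reach a target), which may differ from the actual pickedRange format, and it models the obstacle as an axis-aligned box. ray_box_distance uses the standard slab method; react and its thresholds are hypothetical control logic.

```python
def ray_box_distance(origin, direction, lo, hi, length):
    """Distance from origin to an axis-aligned box along one picking line.

    direction must be a unit vector and the line is `length` long.
    Returns None if the line does not reach the box (slab method).
    """
    t_near, t_far = 0.0, length
    for o, d, l, h in zip(origin, direction, lo, hi):
        if d == 0.0:
            # Line is parallel to this pair of slabs: it must start between them.
            if o < l or o > h:
                return None
            continue
        t1, t2 = (l - o) / d, (h - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


def react(ranges, stop_at=0.5, warn_at=2.0):
    """Hypothetical logic driven by range readings (None means no hit)."""
    hits = [r for r in ranges if r is not None]
    if not hits:
        return "go"
    nearest = min(hits)
    if nearest <= stop_at:
        return "stop"
    if nearest <= warn_at:
        return "slow"
    return "go"


if __name__ == "__main__":
    wall_lo, wall_hi = (3.0, -1.0, -1.0), (3.5, 1.0, 1.0)
    for x in (0.0, 1.5, 2.8):
        reading = ray_box_distance((x, 0, 0), (1, 0, 0), wall_lo, wall_hi, 5.0)
        print(x, react([reading]))  # 0.0 go, then 1.5 slow, then 2.8 stop
```

With a line only 1.0 long, the robot at x = 1.5 gets no reading at all, so the longer line is what provides the warning.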

Use Collision Detection Data in Models

The isActive output property of a sensor becomes TRUE when a collision occurs. To connect a model to the virtual world, you can use a VR Source block to read the sensor's isActive property and the current position of the object for which the sensor is defined. You can use a VR Sink block to define the behavior of the virtual world object, such as its position, rotation, or color.

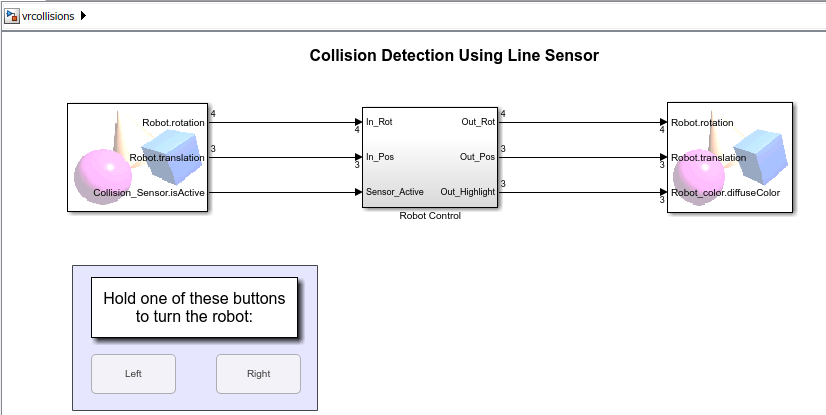

For example, the VR Source block in a Simulink model gets data from the associated virtual world.

In the model, select the VR Source block, and then in the Simulink 3D Animation Viewer, select Simulation > Block parameters. This image shows some of the key selected properties.

For the LinePickSensor, PointPickSensor, and PrimitivePickSensor, you can select these output properties for a VR Source block, depending on the sensor type:

enabled – Enables node operation.

Note: The enabled property is the only property that you can select with a VR Sink block.

isActive – Indicates when a pick target is picked (intersected) by the picking geometry.

pickedPoint – Displays the points on the surface of the underlying PickGeometry that are picked (in the local coordinate system).

pickedRange – Indicates range readings from the picking. For details, see Avoid Impending Collisions.

For a PointPickSensor, you can select the enabled, isActive, and pickedPoint outputs. For a PrimitivePickSensor, you can select the enabled and isActive outputs.
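Because pickedPoint is reported in the local coordinate system, you may need world coordinates for your logic. This Python sketch converts a point through a single VRML Transform. It assumes the Transform's center is (0, 0, 0) and its scaleOrientation is the identity, so the transform reduces to scale, then rotate, then translate; local_to_world and rotate are hypothetical helper names, and rotation uses the VRML (x, y, z, angle) form.

```python
import math


def rotate(v, axis, angle):
    """Rotate vector v by `angle` radians about `axis` (Rodrigues' rotation formula)."""
    n = math.sqrt(sum(a * a for a in axis))
    k = tuple(a / n for a in axis)  # unit rotation axis
    c, s = math.cos(angle), math.sin(angle)
    dot = sum(ki * vi for ki, vi in zip(k, v))
    cross = (k[1] * v[2] - k[2] * v[1],
             k[2] * v[0] - k[0] * v[2],
             k[0] * v[1] - k[1] * v[0])
    return tuple(vi * c + ci * s + ki * dot * (1 - c)
                 for vi, ci, ki in zip(v, cross, k))


def local_to_world(point, translation=(0, 0, 0), rotation=(0, 0, 1, 0), scale=(1, 1, 1)):
    """Map a point from a Transform's local frame to its parent frame.

    Assumes center = (0, 0, 0) and an identity scaleOrientation.
    """
    scaled = tuple(p * s for p, s in zip(point, scale))
    rotated = rotate(scaled, rotation[:3], rotation[3])
    return tuple(r + t for r, t in zip(rotated, translation))


if __name__ == "__main__":
    # 90 degrees about y maps local +x to -z, then translate by (2, 0, 3).
    print(local_to_world((1, 0, 0), translation=(2, 0, 3),
                         rotation=(0, 1, 0, math.pi / 2)))
```

For nested Transform nodes, apply the conversion repeatedly, starting from the innermost Transform.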

The Robot Control subsystem block includes the logic to change the color and position of the robot.

Based on the Robot Control subsystem output, the VR Sink block updates the virtual world to reflect the color and position of the robot.
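The contents of the Robot Control subsystem are not shown here, so the following Python sketch is only one hypothetical version of such logic: for each sample step, it takes the isActive value read by the VR Source block and returns the new position and color that a VR Sink block would write. Robot, robot_control, and the colors are illustrative assumptions.

```python
from dataclasses import dataclass

GREEN = (0.0, 1.0, 0.0)
RED = (1.0, 0.0, 0.0)


@dataclass(frozen=True)
class Robot:
    x: float      # position along the x-axis
    heading: int  # +1 moves toward +x, -1 toward -x


def robot_control(robot, is_active, speed, dt):
    """One sample step: back away in red while colliding, otherwise advance in green."""
    step = speed * dt
    if is_active:
        # Collision detected: move opposite to the heading and turn red.
        return Robot(robot.x - robot.heading * step, robot.heading), RED
    return Robot(robot.x + robot.heading * step, robot.heading), GREEN


if __name__ == "__main__":
    r = Robot(0.0, 1)
    r, color = robot_control(r, False, speed=1.0, dt=0.1)
    print(r, color)
    r, color = robot_control(r, True, speed=1.0, dt=0.1)
    print(r, color)
```

Backing away while isActive stays TRUE, rather than flipping the heading on every sample, avoids oscillating when the contact lasts several samples.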

Tip

For more precise collision detection, consider using a smaller sample time for the blocks.
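The effect of sample time can be illustrated with a small Python sketch. It assumes a point robot moving at constant speed toward a thin wall, with collisions checked only at the sample instants; first_detection is a hypothetical helper. With a coarse sample time, consecutive samples can land on either side of the wall, so the collision is never reported.

```python
def first_detection(wall_lo, wall_hi, speed, dt, duration):
    """Return the first sample time at which a point robot is inside a thin wall.

    The robot starts at x = 0 and moves along +x at constant speed; collisions
    are checked only at the sample instants k * dt. Returns None if no sample
    lands inside the wall during `duration`.
    """
    steps = int(round(duration / dt))
    for k in range(steps + 1):
        t = k * dt
        if wall_lo <= speed * t <= wall_hi:
            return t
    return None


if __name__ == "__main__":
    # Wall occupies 5.21 <= x <= 5.29; the robot moves at 1 m/s for 10 s.
    print(first_detection(5.21, 5.29, 1.0, 0.5, 10.0))   # None (samples at 5.0 and 5.5)
    print(first_detection(5.21, 5.29, 1.0, 0.05, 10.0))  # about 5.25
```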

Use Collision Detection Data in Virtual Worlds

You can use collision detection to manipulate virtual world objects, independently of a Simulink model or a virtual world object in MATLAB.

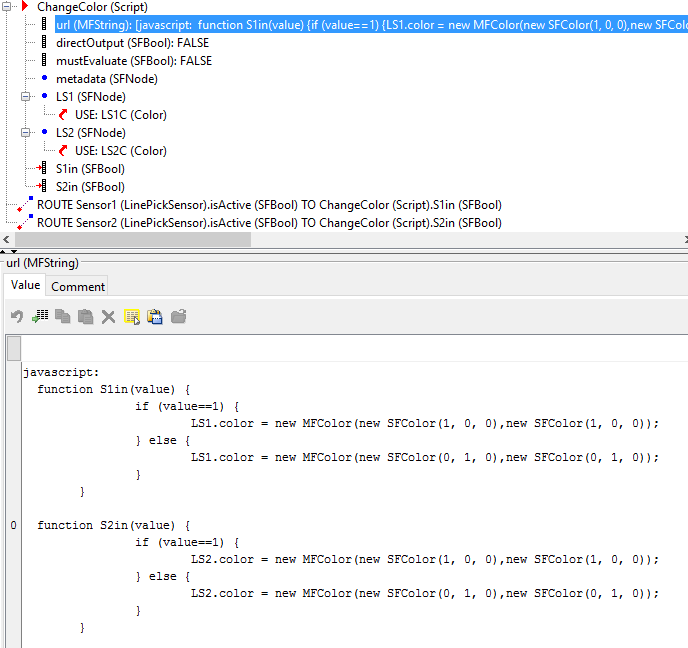

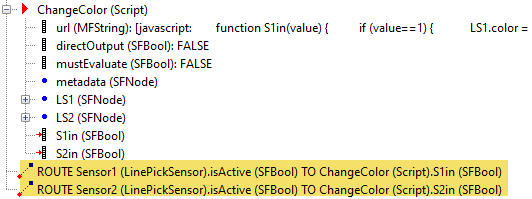

The VRML code includes a ROUTE statement for each of the pick sensors.

The ROUTE statements send the sensor events to a Script node named ChangeColor, which defines the logic that responds to them.
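To show how ROUTE statements and a Script node cooperate, here is a conceptual Python model of VRML event routing. It is not VRML and not the actual ChangeColor script, whose contents are not shown. The node and field names Sensor1, Sensor2, set_sensor1, set_sensor2, color_changed, and RobotMaterial are hypothetical; diffuseColor is the standard VRML Material field. In this sketch, the color is red while any sensor is active and gray otherwise.

```python
from collections import defaultdict

RED = (1.0, 0.0, 0.0)
GRAY = (0.5, 0.5, 0.5)


class Scene:
    """Tiny model of VRML event routing (ROUTE src.field TO dst.field)."""

    def __init__(self):
        self.values = defaultdict(dict)  # node name -> {field: last value}
        self.routes = defaultdict(list)  # (node, field) -> [(node, field), ...]
        self.scripts = {}                # node name -> handler(field, value) -> {field: value}

    def route(self, src, src_field, dst, dst_field):
        self.routes[(src, src_field)].append((dst, dst_field))

    def emit(self, node, field, value):
        """Set a field, run the node's script (if any), then follow matching ROUTEs."""
        self.values[node][field] = value
        handler = self.scripts.get(node)
        if handler is not None:
            for out_field, out_value in handler(field, value).items():
                self.emit(node, out_field, out_value)
        for dst, dst_field in self.routes[(node, field)]:
            self.emit(dst, dst_field, value)


def make_change_color():
    """Hypothetical ChangeColor logic: red while any sensor is active, gray otherwise."""
    active = {}

    def handler(field, value):
        if field.startswith("set_"):  # input events coming from the sensors
            active[field] = bool(value)
            return {"color_changed": RED if any(active.values()) else GRAY}
        return {}                     # the script's own output events need no handling

    return handler


def build_scene():
    scene = Scene()
    scene.scripts["ChangeColor"] = make_change_color()
    scene.route("Sensor1", "isActive", "ChangeColor", "set_sensor1")
    scene.route("Sensor2", "isActive", "ChangeColor", "set_sensor2")
    scene.route("ChangeColor", "color_changed", "RobotMaterial", "diffuseColor")
    return scene
```

For example, after build_scene().emit("Sensor1", "isActive", True), the RobotMaterial diffuseColor value becomes RED; in a real world file, the same chain is expressed with ROUTE statements and the Script node's input and output events.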