Getting Started with Point Clouds Using Deep Learning

Deep learning can automatically process point clouds for a wide range of 3-D imaging applications. Point clouds typically come from 3-D scanners, such as lidar or Kinect® devices. They have applications in robot navigation and perception, depth estimation, stereo vision, surveillance, scene classification, and advanced driver assistance systems (ADAS).

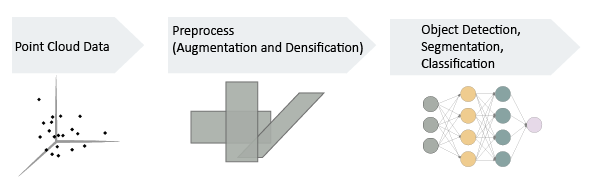

In general, the first steps for using point cloud data in a deep learning workflow are:

- Import point cloud data. Use a datastore to manage large amounts of data.

- Optionally, augment the data.

- Encode the point cloud into an image-like format consistent with MATLAB®-based deep learning workflows.

You can apply the same deep learning approaches to classification, object detection, and semantic segmentation tasks on point cloud data as you would on regular gridded image data. However, you must first encode the unordered, irregular, ungridded structure of point cloud and lidar data into a regular gridded form. For certain tasks, such as semantic segmentation, the output of the image-based network requires some postprocessing to restore the point cloud structure.
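To make the postprocessing step concrete, the following minimal sketch maps a per-cell label grid (standing in for the per-pixel output of an image segmentation network) back onto the original points: each point inherits the label of the grid cell it falls into. It is written in Python for illustration only and assumes a hypothetical bird's-eye-view encoding in which the x-y plane is split into square cells; the function names and parameters are assumptions, not MATLAB or toolbox APIs.

```python
def cell_index(point, origin, cell_size):
    """Return the (row, col) grid cell that an (x, y, z) point falls into.

    Hypothetical bird's-eye-view encoding: z is ignored and the x-y plane
    is divided into square cells of side cell_size starting at origin.
    """
    x, y, _ = point
    row = int((y - origin[1]) // cell_size)
    col = int((x - origin[0]) // cell_size)
    return row, col


def labels_to_points(points, label_grid, origin, cell_size, unknown=-1):
    """Assign each point the label predicted for its grid cell.

    label_grid is a list of rows of labels (the network's per-pixel output).
    Points that fall outside the grid receive the `unknown` label.
    """
    rows, cols = len(label_grid), len(label_grid[0])
    point_labels = []
    for p in points:
        r, c = cell_index(p, origin, cell_size)
        if 0 <= r < rows and 0 <= c < cols:
            point_labels.append(label_grid[r][c])
        else:
            point_labels.append(unknown)
    return point_labels
```

For example, with a 2-by-2 label grid of 1-unit cells anchored at the origin, the points (0.5, 0.5, 1.0), (1.5, 0.2, 0.0), and (5.0, 5.0, 0.0) receive labels from cells (0, 0) and (0, 1), and the third point, outside the grid, is marked unknown.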

Import Point Cloud Data

To work with point cloud data in deep learning workflows, first read the raw data. Consider using a datastore to work with and represent collections of data that are too large to fit in memory at one time. Because deep learning often requires large amounts of data, datastores are an important part of the deep learning workflow in MATLAB. For more details about datastores, see Datastores for Deep Learning (Deep Learning Toolbox).
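The key idea of a datastore is that it keeps only the list of file locations in memory and loads one item at a time. The following Python sketch imitates the hasdata/read/reset pattern that MATLAB datastores expose; the class, its method names, and the reader function are hypothetical illustrations, not the MATLAB datastore implementation.

```python
class PointCloudFileStore:
    """Minimal datastore-like reader (hypothetical, for illustration only).

    Stores only the file paths and loads one file per read() call, so the
    whole collection never has to fit in memory at once.
    """

    def __init__(self, paths, reader):
        self.paths = list(paths)  # file locations: cheap to keep in memory
        self.reader = reader      # function mapping a path to a list of (x, y, z) points
        self._next = 0            # index of the next file to read

    def has_data(self):
        """Return True while unread files remain."""
        return self._next < len(self.paths)

    def read(self):
        """Load and return the next point cloud."""
        if not self.has_data():
            raise IndexError("no more data; call reset() to start over")
        path = self.paths[self._next]
        self._next += 1
        return self.reader(path)

    def reset(self):
        """Rewind to the first file, for example at the start of an epoch."""
        self._next = 0
```

A training loop would call `read()` while `has_data()` is true and call `reset()` between epochs. In MATLAB, a similar role is typically filled by a datastore such as `fileDatastore` with `pcread` as its read function.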

The Import Point Cloud Data for Deep Learning example imports a large point cloud data set, and then configures and loads a datastore.

Augment Data

The accuracy and success of a deep learning model depend on large annotated data sets. Augmentation enlarges a data set by generating randomly modified copies of existing samples, which helps reduce overfitting. Overfitting occurs when a model learns noise in the training data as if it were signal, so that it performs well on the training data but generalizes poorly to new data. By adding random variation, such as noise, augmentation discourages the model from memorizing individual data points. Augmentation can also make the model robust to transformations that are poorly represented in the original training data, for example rotation, reflection, and translation. By reducing overfitting, augmentation often improves results at the inference stage, when the trained network makes predictions on new data.
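The transformations named above can be sketched for a point list as follows: a random rotation about the z-axis, a random reflection, a random translation, and small Gaussian jitter. This is a minimal Python illustration, not the pipeline from the MATLAB example; the function name, parameter names, and default ranges are assumptions chosen for readability.

```python
import math
import random


def augment(points, rng, max_angle=math.pi, max_shift=0.5, jitter=0.01):
    """Randomly rotate, reflect, translate, and jitter a list of (x, y, z) points.

    The parameter names and default ranges are illustrative assumptions.
    """
    theta = rng.uniform(-max_angle, max_angle)     # rotation angle about the z-axis
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    flip = -1.0 if rng.random() < 0.5 else 1.0     # reflect across the x-z plane (negate y)
    dx, dy, dz = (rng.uniform(-max_shift, max_shift) for _ in range(3))

    out = []
    for x, y, z in points:
        y = flip * y                               # optional reflection
        xr = cos_t * x - sin_t * y                 # rotation about z
        yr = sin_t * x + cos_t * y
        out.append((
            xr + dx + rng.gauss(0.0, jitter),      # translation plus sensor-like noise
            yr + dy + rng.gauss(0.0, jitter),
            z + dz + rng.gauss(0.0, jitter),
        ))
    return out
```

With `jitter=0.0`, the result is a rigid motion (possibly with a reflection), so distances between points are preserved; the object keeps its shape while its pose changes, which is what makes the augmented sample a plausible new training example. Passing a seeded `random.Random` makes the augmentation reproducible.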

The Augment Point Cloud Data for Deep Learning example sets up a basic randomized data augmentation pipeline that works with point cloud data.

Encode Point Cloud Data to Image-like Format

To use point clouds for training with MATLAB-based deep learning workflows, you must encode the data into a dense, image-like format. Densification, or voxelization, is the process of transforming irregular, ungridded point cloud data into a dense, image-like form.
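One simple voxel encoding is a binary occupancy grid: space is divided into equal cubes, and each cube stores 1 if any point falls inside it. The Python sketch below illustrates this idea under assumed parameters (grid corner, voxel size, grid dimensions); it is not the encoding used in the MATLAB example, and `pcbin`, listed under See Also, is the MATLAB function for spatially binning point cloud points.

```python
def voxelize(points, grid_min, voxel_size, dims):
    """Encode an unordered list of (x, y, z) points as a dense occupancy grid.

    grid_min:   (x, y, z) corner of the grid
    voxel_size: edge length of each cubic voxel
    dims:       (nx, ny, nz) number of voxels along each axis
    Each voxel holds 1 if at least one point falls inside it, else 0.
    Points outside the grid are dropped. Returns grid[i][j][k].
    """
    nx, ny, nz = dims
    grid = [[[0] * nz for _ in range(ny)] for _ in range(nx)]
    for p in points:
        # Integer voxel coordinates along x, y, and z.
        idx = [int((p[a] - grid_min[a]) // voxel_size) for a in range(3)]
        if all(0 <= idx[a] < dims[a] for a in range(3)):
            grid[idx[0]][idx[1]][idx[2]] = 1
    return grid
```

Because each point only marks the voxel it lands in, the result does not depend on the order of the input points, which is exactly the property needed to turn an unordered point cloud into a regular, image-like array. Richer encodings can store point counts or mean heights per voxel instead of a 0/1 flag.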

The Encode Point Cloud Data for Deep Learning example transforms point cloud data into a dense, gridded structure.

Train a Deep Learning Classification Network with Encoded Point Cloud Data

Once you have encoded point cloud data into a dense form, you can use the data for an image-based classification, object detection, or semantic segmentation task using standard deep learning approaches.

The Train Classification Network to Classify Object in 3-D Point Cloud example preprocesses point cloud data into a voxelized encoding and then uses the image-like data with a simple 3-D convolutional neural network to perform object classification.
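The dense encoding matters because a 3-D convolutional layer slides a small kernel over a regular grid, which an unordered point list does not provide. The following pure-Python sketch shows the core operation of such a layer (single channel, stride 1, no padding, kernel not flipped, as in most deep learning frameworks) followed by a ReLU activation. It is an illustration of the operation only, not the network from the example; in MATLAB, this kind of layer is created with `convolution3dLayer` in Deep Learning Toolbox.

```python
def conv3d_valid(volume, kernel, bias=0.0):
    """Single-channel 3-D cross-correlation with 'valid' padding and stride 1.

    volume and kernel are nested lists indexed [i][j][k]; the output shrinks
    by (kernel size - 1) along each axis.
    """
    vx, vy, vz = len(volume), len(volume[0]), len(volume[0][0])
    kx, ky, kz = len(kernel), len(kernel[0]), len(kernel[0][0])
    ox, oy, oz = vx - kx + 1, vy - ky + 1, vz - kz + 1
    out = [[[0.0] * oz for _ in range(oy)] for _ in range(ox)]
    for i in range(ox):
        for j in range(oy):
            for k in range(oz):
                acc = bias
                # Weighted sum over the kernel-sized window starting at (i, j, k).
                for a in range(kx):
                    for b in range(ky):
                        for c in range(kz):
                            acc += volume[i + a][j + b][k + c] * kernel[a][b][c]
                out[i][j][k] = acc
    return out


def relu(volume):
    """Element-wise ReLU activation on a nested 3-D list."""
    return [[[max(0.0, v) for v in row] for row in plane] for plane in volume]
```

Applied to a voxel occupancy grid, each output value summarizes the occupancy pattern in a small neighborhood; stacking such layers with pooling and a final fully connected layer yields a simple 3-D classification network.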

See Also

pcbin | pcread | bboxwarp | bboxcrop | bboxresize

Topics

- Lidar 3-D Object Detection Using PointPillars Deep Learning (Lidar Toolbox)

- Lidar Point Cloud Semantic Segmentation Using PointSeg Deep Learning Network (Deep Learning Toolbox)

- Segmentation, Detection, and Labeling (Lidar Toolbox)