Battery State Estimation Using Deep Learning

Carlos Vidal, McMaster University

Phil Kollmeyer, McMaster University

Overview

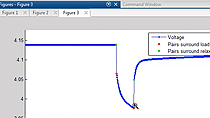

A feedforward deep neural network is trained to estimate the state of charge of a lithium-ion battery cell from measured voltage, current, and temperature. The operating conditions cover a range of current levels and temperatures. The achieved estimation accuracy was around 1% mean absolute error (MAE). The feedforward neural network script and accompanying data are available for download.
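To make the idea concrete, here is a minimal sketch of a feedforward network that maps (voltage, current, temperature) to state of charge and is scored with MAE. It is not the presenters' script. The data come from a made-up toy relation rather than real cell measurements, the network has a single small hidden layer rather than a deep stack, and every name in it (`make_sample`, `init_params`, `sgd_step`, and so on) is hypothetical.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the run is repeatable

def make_sample():
    """Toy, made-up relation between (V, I, T) and SOC; not a real cell model."""
    soc = rng.random()                 # state of charge in [0, 1]
    current = rng.uniform(-1.0, 1.0)   # normalized current
    temp = rng.random()                # normalized temperature
    # Assumed behavior: voltage rises with SOC and sags under load, more when cold.
    voltage = 0.1 + 0.8 * soc - 0.1 * current * (1.5 - temp)
    return [voltage, current, temp], soc

def init_params(hidden):
    """Small random weights for a 3-input, one-hidden-layer, 1-output network."""
    return {
        "W1": [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "W2": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    """Return hidden activations (tanh) and the linear SOC estimate."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y = sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"]
    return h, y

def sgd_step(p, x, target, lr):
    """One stochastic gradient step on the squared error 0.5 * (y - target)^2."""
    h, y = forward(p, x)
    err = y - target
    for j, hj in enumerate(h):
        # Gradient at the hidden pre-activation, using W2 before it is updated.
        grad_pre = err * p["W2"][j] * (1.0 - hj * hj)
        p["W2"][j] -= lr * err * hj
        for i, xi in enumerate(x):
            p["W1"][j][i] -= lr * grad_pre * xi
        p["b1"][j] -= lr * grad_pre
    p["b2"] -= lr * err

def mae(p, data):
    """Mean absolute error of the SOC estimate over a data set."""
    return sum(abs(forward(p, x)[1] - t) for x, t in data) / len(data)

train_set = [make_sample() for _ in range(200)]
test_set = [make_sample() for _ in range(100)]

params = init_params(hidden=8)
mae_before = mae(params, test_set)

for epoch in range(100):
    for x, t in train_set:
        sgd_step(params, x, t, lr=0.05)

mae_after = mae(params, test_set)
print(f"held-out MAE before training: {mae_before:.3f}, after: {mae_after:.3f}")
```

The MAE is computed on samples held out from training, which mirrors how an estimation accuracy figure would normally be reported. Because the data are synthetic, the number this sketch prints says nothing about the accuracy achievable on a real cell.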

About the Presenters

Javier Gazzarri is a Principal Application Engineer at MathWorks in Novi, Michigan, USA, focusing on the use of physical modeling tools as an integral part of Model-Based Design. Much of his work centers on battery modeling, from cell level to system level, parameter estimation for model correlation, battery management system design, balancing, aging, and state-of-charge estimation. Before joining MathWorks, Javier worked on fuel cell modeling at the National Research Council of Canada in Vancouver, British Columbia. He has a Bachelor’s degree in Mechanical Engineering from the University of Buenos Aires (Argentina), and a MASc degree (Inverse Methods) and a PhD degree (Solid Oxide Fuel Cells), both from the University of British Columbia (Canada).

Carlos Vidal received his B.S. in Electrical Engineering from the Federal University of Campina Grande (UFCG) in 2005, an MBA with a concentration in Project Management from the Getulio Vargas Foundation (FGV) in 2007, and his M.A.Sc. in Civil and Environmental Engineering from the Federal University of Pernambuco (UFPE) in 2015, all in Brazil. In 2020 he obtained his Ph.D. in Mechanical Engineering from McMaster University, Hamilton, ON, Canada. He is currently a Postdoctoral Fellow at the McMaster Automotive Research Centre (MARC). Before joining McMaster, he gained industry experience in several engineering and management positions, including developing a 48 V battery system for a mild-hybrid vehicle prototype. His main research areas include artificial intelligence, modelling, and energy storage applied to electrified vehicles and battery management systems.

Phillip Kollmeyer is a Senior Principal Research Engineer at McMaster University in Hamilton, Ontario, Canada. Phillip is the lead engineer for the forty-five-member research team for the Car of the Future project sponsored by Fiat Chrysler Automobiles and Canada’s Natural Sciences and Engineering Research Council (NSERC). His research focuses on state estimation, thermal management, modeling, and aging of electrochemical energy storage systems, and on the application of neural networks to modeling and state estimation. Phil has a Bachelor’s degree, a MASc degree (electric vehicle drivetrains), and a PhD degree (hybrid energy storage and electric trucks), all from the University of Wisconsin-Madison (USA).

Recorded: 11 Dec 2020