Unorganized to Organized Conversion of Point Clouds Using Spherical Projection

This example shows how to convert unorganized point clouds to organized format using spherical projection.

Introduction

A 3-D lidar point cloud is usually represented as a set of Cartesian coordinates (x, y, z). A point cloud can also contain additional information, such as intensity and RGB values. Unlike image pixels, which lie on a regular grid, lidar points are usually distributed sparsely and irregularly, and processing such sparse data is inefficient. To obtain a compact representation, you can project a lidar point cloud onto a sphere to create a dense, grid-based representation known as an organized representation [1]. To learn more about the differences between organized and unorganized point clouds, see What Are Organized and Unorganized Point Clouds? Some ground plane extraction and keypoint detection methods require organized point clouds. Additionally, you must convert your point cloud to organized format to use most deep learning segmentation networks, including SqueezeSegV1, SqueezeSegV2, RangeNet++ [2], and SalsaNext [3]. For an example showing how to use deep learning with an organized point cloud, see the Lidar Point Cloud Semantic Segmentation Using SqueezeSegV2 Deep Learning Network example.
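The projection itself can be sketched in a few lines of base MATLAB. This is a minimal sketch, not the algorithm that pcorganize uses: it assumes uniformly spaced beams and a 360-degree horizontal field of view, and the field-of-view limits, grid size, and sample points are hypothetical values chosen for illustration. The azimuth of each point selects a column, and its elevation selects a row.

```matlab
% Minimal spherical projection sketch.
% Assumptions: uniformly spaced beams, 360-degree horizontal field of view.
% All sensor values below are hypothetical, for illustration only.
vFoVUp = 2;          % degrees above the horizon
vFoVDown = -24.9;    % degrees below the horizon
vResolution = 64;    % number of rows (lasers)
hResolution = 1024;  % number of columns

% Three example points (x, y, z), in meters.
xyz = [10 0  0;
       10 5 -1;
       10 0  5];

% Azimuth and elevation of each point, in degrees.
azimuth   = atan2(xyz(:,2), xyz(:,1)) * 180/pi;
elevation = atan2(xyz(:,3), hypot(xyz(:,1), xyz(:,2))) * 180/pi;

% Map elevation linearly to a row: row 1 is the top beam (vFoVUp),
% row vResolution is the bottom beam (vFoVDown).
row = round((vFoVUp - elevation) / (vFoVUp - vFoVDown) * (vResolution - 1)) + 1;

% Map azimuth to a column: wrap to [0, 360) and scale to the grid width.
col = floor(mod(azimuth, 360) / 360 * hResolution) + 1;

% Points outside the vertical field of view cannot be placed in the grid.
valid = row >= 1 & row <= vResolution;
```

Here the third point lies about 26.6 degrees above the horizon, outside the assumed vertical field of view, so it is marked invalid. A complete conversion would also store the coordinates and intensity of each valid point at its (row, col) position in an M-by-N-by-3 array and decide what to do when several points fall into the same cell.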

Lidar Sensor Parameters

To convert an unorganized point cloud to organized format using spherical projection, you must specify the parameters of the lidar sensor used to create the point cloud. For details about these parameters, refer to the data sheet of your sensor. You can specify these parameters:

Beam configuration — 'uniform' or 'gradient'. Specify 'uniform' if the beams have equal spacing. Specify 'gradient' if the beams at the horizon are tightly packed and the beams toward the top and bottom of the sensor field of view are more spaced out.

Vertical resolution — Number of channels in the vertical direction, that is, the number of lasers. Typical values are 32 and 64.

Vertical beam angles — Angular position of each vertical channel. You must specify this parameter when the beam configuration is 'gradient'.

Upward vertical field of view — Field of view in the vertical direction above the horizon (in degrees).

Downward vertical field of view — Field of view in the vertical direction below the horizon (in degrees).

Horizontal resolution — Number of channels in the horizontal direction. Typical values are 512 and 1024.

Horizontal angular resolution — Angular spacing between adjacent channels in the horizontal direction (in degrees). You must specify this parameter when the data sheet does not list the horizontal resolution.

Horizontal field of view — Field of view covered in the horizontal direction (in degrees). In most cases, this value is 360 degrees.

You can specify most of these sensor parameters using a lidarParameters object.
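With a 'gradient' beam configuration, rows no longer map linearly to elevation. One plausible way to assign a row, sketched below, is to pick the beam whose angle is closest to the elevation of each point. The beam angles and elevations here are hypothetical, the angles are assumed to be listed from top to bottom, and pcorganize may handle this case differently.

```matlab
% Sketch: row assignment for non-uniform ('gradient') beams.
% Hypothetical beam angles in degrees, listed from the top beam to the bottom beam.
% Note the dense spacing near the horizon and the wide spacing at the extremes.
beamAngles = [15 5 2 1 0 -1 -2 -5 -15];

% Hypothetical elevation angles (degrees) of four points, as a column vector.
elevation = [14.2; 0.4; -1.6; -9.0];

% Distance from every point to every beam (4-by-9, via implicit expansion,
% which requires R2016b or later), then the index of the closest beam per point.
[~, row] = min(abs(elevation - beamAngles), [], 2);
```

With uniform beams, the linear mapping from elevation to row is enough. With gradient beams, a nearest-angle lookup like this keeps the dense beams near the horizon in separate rows.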

Ouster OS-1 Sensor

Read the point cloud using the pcread function.

ptCloud = pcread('ousterLidarDrivingData.pcd');

Check the size of the sample point cloud. If the point cloud coordinates are stored as an M-by-N-by-3 array, the point cloud is organized.

isOrganized = size(ptCloud.Location,3) == 3;

Remove the invalid points and convert the point cloud to unorganized format using the removeInvalidPoints function. If the point cloud is already unorganized, use it as is.

if isOrganized
    ptCloudUnOrg = removeInvalidPoints(ptCloud);
else
    ptCloudUnOrg = ptCloud;
end
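Conceptually, converting an organized point cloud to unorganized format flattens the M-by-N grid into a list of points and discards the empty cells. The sketch below shows this on a plain numeric array; the 2-by-3 grid and its values are hypothetical, and NaN marks cells with no return. For a pointCloud object, removeInvalidPoints does the equivalent by removing points that have NaN or Inf coordinates.

```matlab
% Hypothetical 2-by-3 organized grid; NaN marks empty cells.
X = [1 NaN 4; NaN 7 NaN];
Y = [2 NaN 5; NaN 8 NaN];
Z = [3 NaN 6; NaN 9 NaN];
loc = cat(3, X, Y, Z);                 % M-by-N-by-3 organized layout

isGridOrganized = size(loc, 3) == 3;   % same test as the isOrganized check above

% Flatten to (M*N)-by-3 (column-major pixel order), then keep only finite rows.
xyz = reshape(loc, [], 3);
xyz = xyz(all(isfinite(xyz), 2), :);
```

Because reshape walks the grid in column-major order, the surviving points come out column by column: the point at (1,1), then (2,2), then (1,3).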

The point cloud data was collected from an Ouster OS1 Gen1 sensor. Specify the sensor parameters using the lidarParameters function.

hResolution = 1024;

params = lidarParameters("OS1Gen1-64",hResolution);

Convert the unorganized point cloud to organized format using the pcorganize function.

ptCloudOrg = pcorganize(ptCloudUnOrg,params);
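When several points project onto the same grid cell, a converter has to choose which one to keep. A common strategy in range-image projection is to keep the closest point. The sketch below writes points in order of decreasing range, so the nearest point in each cell is written last. The row and column indices and ranges are hypothetical, and this example does not state how pcorganize resolves such collisions, so treat the sketch as an illustration of the idea only.

```matlab
% Hypothetical grid indices and ranges (meters) for four points on a 2-by-3 grid.
row = [1; 1; 2; 1];
col = [1; 3; 2; 1];              % points 1 and 4 land in the same cell
pointRange = [12.0; 5.0; 7.5; 8.0];
M = 2;
N = 3;

% Sort by decreasing range so that closer points overwrite farther ones.
[~, order] = sort(pointRange, 'descend');

rangeImage = nan(M, N);          % NaN marks cells with no point
for k = order'
    rangeImage(row(k), col(k)) = pointRange(k);
end
```

Cell (1,1) ends up holding 8.0, the nearer of the two colliding points, while the 12.0 m point is dropped.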

Display the intensity channel of the original and reconstructed organized point clouds.

figure

montage({uint8(ptCloud.Intensity),uint8(ptCloudOrg.Intensity)});

title("Intensity Channel of Original Point Cloud (Top) vs. Reconstructed Organized Point Cloud (Bottom)")

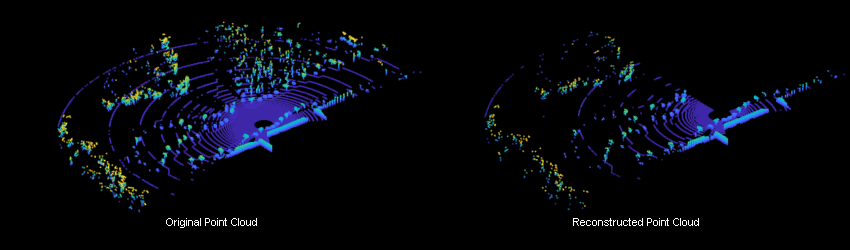

Display both the original organized point cloud and the reconstructed organized point cloud using the helperShowUnorgAndOrgPair helper function, attached to this example as a supporting file.

display1 = helperShowUnorgAndOrgPair();
zoomFactor = 3.5;
display1.plotLidarScan(ptCloud,ptCloudOrg,zoomFactor);

Velodyne Sensor

Read the point cloud using the pcread function.

ptCloudUnOrg = pcread('HDL64LidarData.pcd');

The point cloud data was collected from a Velodyne HDL-64 sensor. Specify the sensor parameters using the lidarParameters function.

hResolution = 1024;

params = lidarParameters("HDL64E",hResolution);

Convert the unorganized point cloud to organized format using the pcorganize function.

ptCloudOrg = pcorganize(ptCloudUnOrg,params);

Display the intensity channel of the reconstructed organized point cloud. Resize the image for better visualization.

intensityChannel = ptCloudOrg.Intensity;

intensityChannel = imresize(intensityChannel,'Scale',[3 1]);

figure

imshow(intensityChannel);

Display both the original unorganized point cloud and the reconstructed organized point cloud using the helperShowUnorgAndOrgPair helper function, attached to this example as a supporting file.

display2 = helperShowUnorgAndOrgPair();
zoomFactor = 2.5;
display2.plotLidarScan(ptCloudUnOrg,ptCloudOrg,zoomFactor);

Configure the Sensor Parameters

For any point cloud, you can specify sensor parameters such as the vertical and horizontal resolution and the vertical and horizontal fields of view when converting it to organized format.

Read the point cloud using the pcread function.

ptCloudUnOrg = pcread('HDL64LidarData.pcd');

The point cloud data was collected from a Velodyne HDL-64 sensor. Instead of using a predefined sensor model, configure the sensor by specifying custom parameters.

% Define the vertical and horizontal resolution.
vResolution = 32;
hResolution = 512;
% Define the vertical and horizontal fields of view (in degrees).
vFoVUp = 2;
vFoVDown = -24.9;
vFoV = [vFoVUp vFoVDown];
hFoV = 270;
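These values determine the angular size of each grid cell. The sketch below works out the spacing under the assumption that rows span the vertical field of view evenly, endpoints included; the exact convention that lidarParameters uses is not stated in this example. Because the HDL-64 sensor has 64 lasers, a 32-row grid covers about two lasers per row, so points from neighboring lasers are likely to compete for the same cells.

```matlab
% Grid parameters from this section.
vResolution = 32;          % rows
hResolution = 512;         % columns
vFoV = [2 -24.9];          % [up down], in degrees
hFoV = 270;                % degrees

% Assumption: rows span the vertical field of view evenly, endpoints included.
vStep = (vFoV(1) - vFoV(2)) / (vResolution - 1);   % about 0.868 degrees per row
hStep = hFoV / hResolution;                         % about 0.527 degrees per column

% The HDL-64 sensor has 64 lasers, so each row covers this many lasers.
lasersPerRow = 64 / vResolution;
```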

Specify the sensor parameters using the lidarParameters function.

params = lidarParameters(vResolution,vFoV,hResolution,"HorizontalFoV",hFoV);

Convert the unorganized point cloud to organized format using the pcorganize function.

ptCloudOrg = pcorganize(ptCloudUnOrg,params);

Display the intensity channel of the reconstructed organized point cloud. Resize the image for better visualization.

intensityChannel = ptCloudOrg.Intensity;

intensityChannel = imresize(intensityChannel,'Scale',[3 1]);

figure

imshow(intensityChannel);

Display both the original unorganized point cloud and the reconstructed organized point cloud using the helperShowUnorgAndOrgPair helper function, attached to this example as a supporting file.

display3 = helperShowUnorgAndOrgPair();
zoomFactor = 2.5;
display3.plotLidarScan(ptCloudUnOrg,ptCloudOrg,zoomFactor);

Pandar Sensor

Read the point cloud using the pcread function. The point cloud data was collected using a Pandar-64 sensor and is part of the PandaSet data set [4], [5].

ptCloudUnOrg = pcread('Pandar64LidarData.pcd');

Specify these parameters of the Pandar-64 sensor. For more information, refer to the sensor user manual, available in the Downloads section of the Hesai® website.

vResolution = 64; hAngResolution = 0.2;

The beam configuration is 'gradient', meaning that the beam spacing is not uniform. Specify the beam angle values along the vertical direction.

vbeamAngles = [15.0000 11.0000 8.0000 5.0000 3.0000 2.0000 1.8333 1.6667 1.5000 1.3333 1.1667 1.0000 0.8333 0.6667 ...
    0.5000 0.3333 0.1667 0 -0.1667 -0.3333 -0.5000 -0.6667 -0.8333 -1.0000 -1.1667 -1.3333 -1.5000 -1.6667 ...
    -1.8333 -2.0000 -2.1667 -2.3333 -2.5000 -2.6667 -2.8333 -3.0000 -3.1667 -3.3333 -3.5000 -3.6667 -3.8333 -4.0000 ...
    -4.1667 -4.3333 -4.5000 -4.6667 -4.8333 -5.0000 -5.1667 -5.3333 -5.5000 -5.6667 -5.8333 -6.0000 -7.0000 -8.0000 ...
    -9.0000 -10.0000 -11.0000 -12.0000 -13.0000 -14.0000 -19.0000 -25.0000];
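To see why this configuration is called 'gradient', you can compute the spacing between adjacent beams. The block below repeats the 64 beam angles from this section, as listed above (rounded to four decimals), and measures the gaps: about 1/6 degree near the horizon and up to 6 degrees at the bottom of the field of view.

```matlab
% Pandar-64 beam angles (degrees), listed from the top beam to the bottom beam.
vbeamAngles = [15.0000 11.0000 8.0000 5.0000 3.0000 2.0000 1.8333 1.6667 1.5000 1.3333 1.1667 1.0000 0.8333 0.6667 ...
    0.5000 0.3333 0.1667 0 -0.1667 -0.3333 -0.5000 -0.6667 -0.8333 -1.0000 -1.1667 -1.3333 -1.5000 -1.6667 ...
    -1.8333 -2.0000 -2.1667 -2.3333 -2.5000 -2.6667 -2.8333 -3.0000 -3.1667 -3.3333 -3.5000 -3.6667 -3.8333 -4.0000 ...
    -4.1667 -4.3333 -4.5000 -4.6667 -4.8333 -5.0000 -5.1667 -5.3333 -5.5000 -5.6667 -5.8333 -6.0000 -7.0000 -8.0000 ...
    -9.0000 -10.0000 -11.0000 -12.0000 -13.0000 -14.0000 -19.0000 -25.0000];

nBeams = numel(vbeamAngles);       % should equal the vertical resolution (64)

% Gap between each beam and the one below it; positive because angles decrease.
spacing = -diff(vbeamAngles);

minSpacing = min(spacing);         % tightest packing, near the horizon
maxSpacing = max(spacing);         % widest gap, at the bottom of the field of view
```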

Calculate the horizontal resolution from the horizontal angular resolution, using a 360-degree horizontal field of view.

hResolution = round(360/hAngResolution);

Define the sensor parameters using the lidarParameters function.

params = lidarParameters(vbeamAngles,hResolution);

Convert the unorganized point cloud to organized format using the pcorganize function.

ptCloudOrg = pcorganize(ptCloudUnOrg,params);

Display the intensity channel of the reconstructed organized point cloud. Resize the image and use histeq for better visualization.

intensityChannel = ptCloudOrg.Intensity;

intensityChannel = imresize(intensityChannel,'Scale',[3 1]);

figure

imshow(histeq(intensityChannel./max(intensityChannel(:))));
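Histogram equalization helps when most intensity values are small relative to the maximum, as is often the case with lidar returns, because a linear display of such data looks mostly dark. The idea can be sketched by replacing each normalized value with its empirical cumulative distribution, which spreads frequent values apart. This is a simplified sketch with hypothetical intensity values; histeq itself transforms the image toward an approximately flat 64-bin histogram by default, so its output differs in detail.

```matlab
% Hypothetical intensity values with a skewed distribution (most values small).
I = [0 1 1 2 2 2 3 50 200 255];
In = I ./ max(I(:));                    % normalize to [0, 1], as in the example

% Simple equalization: map each value to the fraction of values <= it.
[vals, ~, ic] = unique(In(:));          % vals is sorted; In(:) == vals(ic)
counts = accumarray(ic(:), 1);          % occurrences of each distinct value
cdf = cumsum(counts) / numel(In);       % empirical CDF at each distinct value
J = reshape(cdf(ic), size(In));         % equalized values, same shape as In
```

The three smallest nonzero values, which are nearly indistinguishable after normalization, end up spread between 0.3 and 0.7.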

Display both the original unorganized point cloud and the reconstructed organized point cloud using the helperShowUnorgAndOrgPair helper function, attached to this example as a supporting file.

display4 = helperShowUnorgAndOrgPair();
zoomFactor = 4;
display4.plotLidarScan(ptCloudUnOrg,ptCloudOrg,zoomFactor);

References

[1] Wu, Bichen, Alvin Wan, Xiangyu Yue, and Kurt Keutzer. "SqueezeSeg: Convolutional Neural Nets with Recurrent CRF for Real-Time Road-Object Segmentation from 3D LiDAR Point Cloud." In 2018 IEEE International Conference on Robotics and Automation (ICRA), 1887-93. Brisbane, QLD: IEEE, 2018. https://doi.org/10.1109/ICRA.2018.8462926.

[2] Milioto, Andres, Ignacio Vizzo, Jens Behley, and Cyrill Stachniss. "RangeNet ++: Fast and Accurate LiDAR Semantic Segmentation." In 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 4213-20. Macau, China: IEEE, 2019. https://doi.org/10.1109/IROS40897.2019.8967762.

[3] Cortinhal, Tiago, George Tzelepis, and Eren Erdal Aksoy. "SalsaNext: Fast, Uncertainty-Aware Semantic Segmentation of LiDAR Point Clouds for Autonomous Driving." ArXiv:2003.03653 [Cs], July 9, 2020. https://arxiv.org/abs/2003.03653.

[4] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.

[5] Xiao, Pengchuan, Zhenlei Shao, Steven Hao, et al. "PandaSet: Advanced Sensor Suite Dataset for Autonomous Driving." In 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), 3095-101. IEEE, 2021. https://doi.org/10.1109/ITSC48978.2021.9565009.