Applying AI to Enable Autonomy in Robotics Using MATLAB

AI applications in robotics have expanded greatly in recent years to include voice command, object identification, pose estimation, and motion planning, to name a few. The use of AI-enabled robots continues to grow in manufacturing facilities, power plants, warehouses, and other industrial sites. Warehouse bin-picking is a good example: deep learning and reinforcement learning enable robots to handle a variety of objects with minimal human help, reducing workplace injuries caused by repetitive motions.

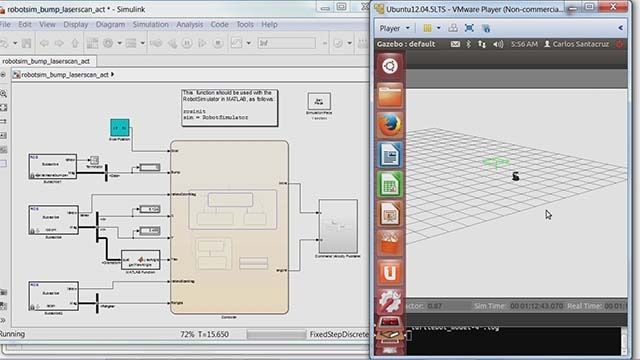

Learn how to empower your robots using AI for perception and motion control in autonomous robotics applications. MATLAB® and Simulink® provide a powerful platform for successful AI adoption in robotics and autonomous systems. You can use the same development platform to design, test, and deploy your AI applications for intelligent bin-picking collaborative robots (cobots), autonomous mobile robots (AMRs), UAVs, and other robotics systems. Working in a single platform reduces both development time and time to market.

Gain insights into:

- Reducing manual effort with automatic data labeling

- Detecting and classifying objects using deep learning for robotics applications

- Planning robot motion using deep learning

- Controlling robot motion using reinforcement learning

- Deploying deep learning algorithms as CUDA-optimized ROS nodes

Published: 5 May 2023