Deploying a Deep Learning-Based State-of-Charge (SOC) Estimation Algorithm to NXP S32K3 Microcontrollers

Overview

Battery management systems (BMS) ensure safe and efficient operation of battery packs in electric vehicles, grid power storage systems, and other battery-driven equipment. One major task of the BMS is estimating state of charge (SOC). Traditional methods for SOC estimation require accurate battery models that are difficult to characterize. An alternative is to create data-driven models of the cell using AI methods such as neural networks.

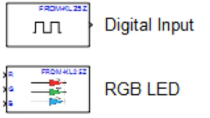

This webinar shows how to use Deep Learning Toolbox, Simulink, and Embedded Coder to generate C code for AI algorithms for battery SOC estimation and deploy them to an NXP S32K3 microcontroller. Building on previous work by McMaster University on deep learning workflows for battery state estimation, we use Embedded Coder to generate optimized C code from a neural network imported from TensorFlow and run it in processor-in-the-loop mode on an NXP S32K3 microcontroller. The code generation workflow features the NXP Model-Based Design Toolbox, which provides an integrated development environment and toolchain for configuring and generating all the software needed to run complex applications on NXP MCUs.
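To give a rough sense of what neural network inference looks like once it is expressed as plain C for a microcontroller, here is a minimal hand-written sketch of a single-hidden-layer feedforward SOC estimator. It is not Embedded Coder output and not the network presented in the webinar: the layer sizes, the choice of inputs (voltage, current, temperature, assumed already normalized), the weight values, and the names `soc_estimate`, `relu`, and `clamp01` are all hypothetical and chosen only for illustration.

```c
#include <stddef.h>

/* Hypothetical layer sizes: 3 normalized inputs (voltage, current,
 * temperature) and 4 hidden units. */
#define N_IN  3
#define N_HID 4

/* Placeholder weights and biases for illustration only. In a real
 * workflow these values come from the trained network. */
static const float W1[N_HID][N_IN] = {
    { 0.50f, -0.10f,  0.02f},
    {-0.30f,  0.20f,  0.01f},
    { 0.80f,  0.05f, -0.03f},
    { 0.10f, -0.40f,  0.04f}
};
static const float B1[N_HID] = {0.10f, 0.00f, -0.20f, 0.05f};
static const float W2[N_HID] = {0.60f, -0.20f, 0.30f, 0.10f};
static const float B2 = 0.05f;

/* Rectified linear unit activation. */
static float relu(float x)
{
    return (x > 0.0f) ? x : 0.0f;
}

/* Limit the estimate to the physically meaningful range [0, 1]. */
static float clamp01(float x)
{
    if (x < 0.0f) { return 0.0f; }
    if (x > 1.0f) { return 1.0f; }
    return x;
}

/* One forward pass: hidden layer with ReLU, then a linear output
 * neuron. Uses only fixed-size constant arrays and no dynamic memory. */
float soc_estimate(const float in[N_IN])
{
    float out = B2;
    for (size_t j = 0; j < N_HID; ++j) {
        float acc = B1[j];
        for (size_t i = 0; i < N_IN; ++i) {
            acc += W1[j][i] * in[i];
        }
        out += W2[j] * relu(acc);
    }
    return clamp01(out);
}
```

Calling `soc_estimate` once per sample period with the latest normalized measurements would return an SOC value between 0 and 1. Keeping all weights in constant arrays and avoiding heap allocation reflects common practice for embedded targets, although automatically generated code will be structured differently.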

Highlights

- Neural network estimation of battery state of charge (SOC)

- Integration of deep learning-based SOC algorithm into a Simulink model

- Generating optimized, production-ready code with Embedded Coder

- Deploying code to an NXP S32K3 microcontroller using the NXP Model-Based Design Toolbox

About the Presenters

Javier Gazzarri, MathWorks

Javier Gazzarri has worked as an application engineer at MathWorks for 10 years, focusing on the use of simulation tools as an integral part of model-based design. Before joining MathWorks, Javier worked on fuel cell modeling at the National Research Council of Canada in Vancouver, British Columbia. He has a bachelor’s degree in mechanical engineering from the University of Buenos Aires (Argentina) and a master’s degree and a PhD, both from the University of British Columbia (Canada).

Marius Andrei, NXP

Marius Andrei joined NXP in 2017, where he contributes to the development of Model-Based Design software solutions for NXP automotive products. Marius graduated from the Politehnica University of Bucharest in Romania with a master's degree in Advanced Computer Architectures.

Recorded: 18 Nov 2021