FPGA, ASIC, and SoC Development with MATLAB and Simulink

Watch an overview of how connecting MATLAB® and Simulink® to FPGA, ASIC, and SoC development can benefit your projects. Learn the many ways customers have improved their productivity, and in some cases targeted FPGA hardware for the first time.

Highlights include:

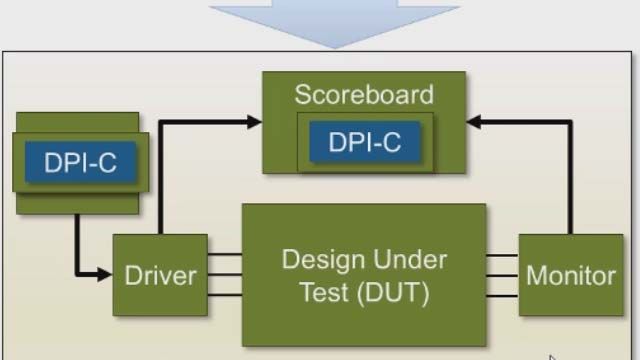

- Challenges of FPGA, SoC, and ASIC design verification

- Importance of collaboration between algorithm developers and hardware design and verification teams

- Exploration of hardware and SoC architectures, and automatic HDL code generation

- Algorithm-level hardware design IP for wireless, vision, radar, and AI applications

- Techniques for reusing MATLAB and Simulink to speed up RTL verification

Published: 13 Jul 2022