Generate Floating-Point HDL for FPGA and ASIC Hardware

Quantizing floating-point algorithms to fixed-point for efficient FPGA or ASIC implementation requires many steps and numerical considerations. Converging on the right balance between arithmetic precision and hardware resource usage is an iterative process between algorithm and hardware design. The process becomes more difficult when the algorithm requires high precision or a high dynamic range.
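The dynamic-range difficulty can be illustrated with a minimal sketch. It is not HDL Coder output or part of any MathWorks workflow; the helper names (`to_fixed`, `to_half`, `rel_err`) and the 16-bit word with 5 fractional bits are assumptions chosen only for illustration. It quantizes the same values to a 16-bit fixed-point format and to 16-bit half precision and compares the results.

```python
import struct

def to_fixed(x, word_len, frac_len):
    """Quantize x to signed fixed-point: round to nearest, then saturate.

    word_len and frac_len are illustrative parameters, not tool settings.
    """
    scale = 1 << frac_len
    lo = -(1 << (word_len - 1))        # most negative stored integer
    hi = (1 << (word_len - 1)) - 1     # most positive stored integer
    q = max(lo, min(hi, round(x * scale)))
    return q / scale

def to_half(x):
    """Round x to IEEE 754 half precision via the struct 'e' format."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def rel_err(approx, exact):
    """Relative error of approx with respect to a nonzero exact value."""
    return abs(approx - exact) / abs(exact)

# Values spanning six decades, both formats limited to 16 bits.
for v in (1e-3, 1.0, 1e3):
    fx = to_fixed(v, word_len=16, frac_len=5)
    hf = to_half(v)
    print(f"{v:>8g}  fixed={fx:<10g} err={rel_err(fx, v):.2e}  "
          f"half={hf:<12g} err={rel_err(hf, v):.2e}")
```

With this particular fixed-point format, the largest value fits but the smallest one rounds to zero, while half precision keeps a small relative error across the whole range. Recovering the small value in fixed-point would take more fractional bits, and therefore a wider word, which is the kind of precision-versus-resource iteration described above.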

To simplify this process, HDL Coder™ can generate target-independent, synthesizable VHDL® or Verilog® code from single-, double-, or half-precision floating-point algorithms for FPGA or ASIC deployment. This overview shows how to generate floating-point HDL for FPGA and ASIC hardware, including:

- How to identify algorithms that might benefit from staying in floating-point

- What types of operations HDL Coder native floating-point code generation supports

- How to mix fixed- and floating-point implementations in the same design using Fixed-Point Designer™

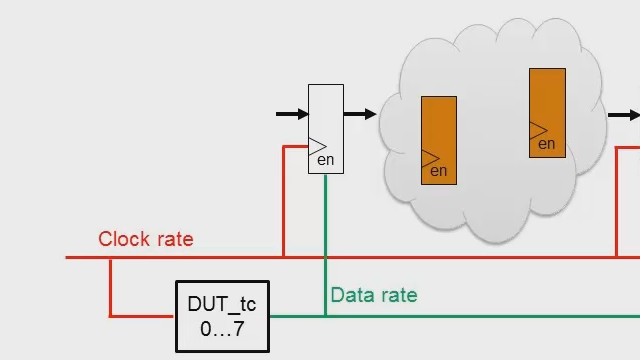

- How to control latency and sharing optimizations for native floating-point code generation to meet your FPGA or ASIC implementation goals

Published: 1 Nov 2016