Import Pretrained Deep Learning Networks into MATLAB

From the series: Perception

In this video, Neha Goel joins Connell D’Souza to show how to import networks designed and trained in frameworks such as TensorFlow and PyTorch into MATLAB® using the Open Neural Network Exchange (ONNX) format. You can download ONNX models of popular pretrained deep learning networks from the ONNX Model Zoo.

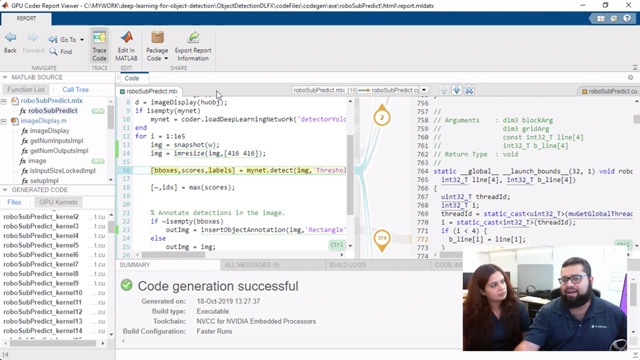

Neha imports a TinyYOLOv2 ONNX model into MATLAB and demonstrates how to use the Deep Network Designer app to edit the model and prepare it for training. She then uses transfer learning to train this pretrained model to identify the objects of interest.

Published: 3 Jan 2020