detect

Syntax

Description

bboxes = detect(detector,I) detects objects within a single image or an array of images, I, using an RTMDet object detector, detector. The detect function returns the locations of detected objects in the input image as a set of bounding boxes.

Note

This functionality requires Deep Learning Toolbox™ and the Computer Vision Toolbox™ Model for RTMDet Object Detection. You can install the Computer Vision Toolbox Model for RTMDet Object Detection from Add-On Explorer. For more information about installing add-ons, see Get and Manage Add-Ons.

detectionResults = detect(detector,ds) detects objects within all the images in the datastore ds.

[___] = detect(___,roi) detects objects within the rectangular region of interest roi, in addition to any combination of arguments from previous syntaxes.

[___] = detect(___,Name=Value) specifies options using one or more name-value arguments. For example, Threshold=0.5 specifies a detection threshold of 0.5.

Examples

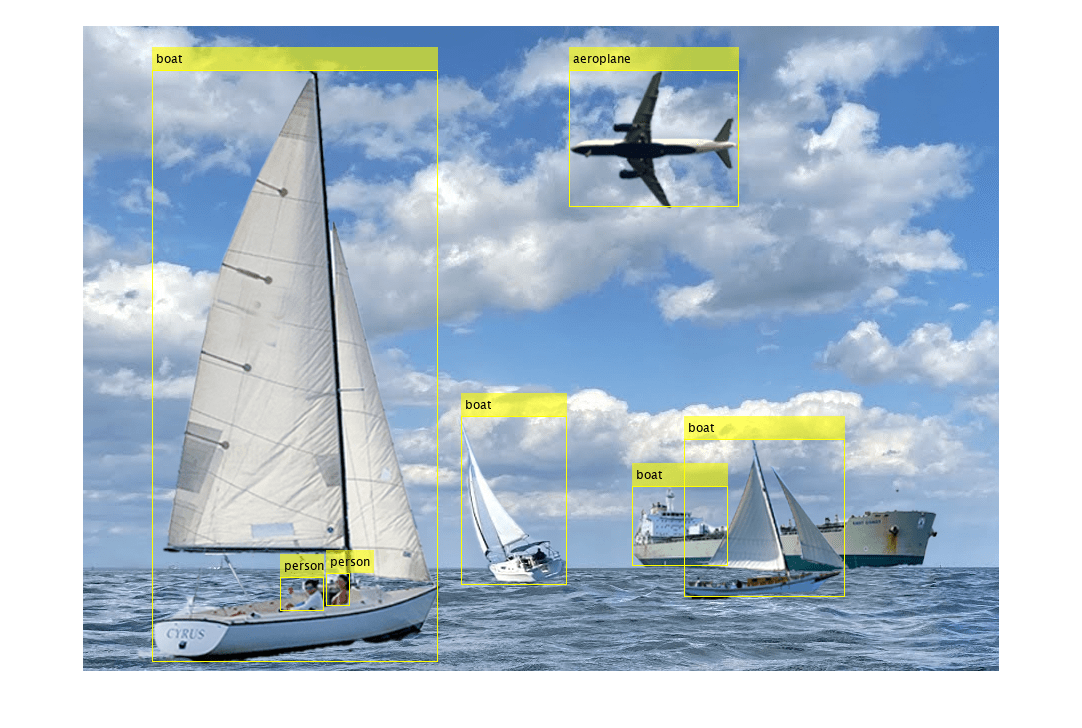

Specify the name of a pretrained RTMDet deep learning network.

modelname = "medium-network-coco";

Create an RTMDet object detector by using the pretrained RTMDet network.

detector = rtmdetObjectDetector(modelname);

Read a test image into the workspace, and detect objects in it by using the pretrained RTMDet object detector with a Threshold value of 0.55.

img = imread("boats.png");

[bboxes,scores,labels] = detect(detector,img,Threshold=0.55);

Display the bounding boxes and predicted class labels of the detected objects.

detectedImg = insertObjectAnnotation(img,"rectangle",bboxes,labels);

figure

imshow(detectedImg)

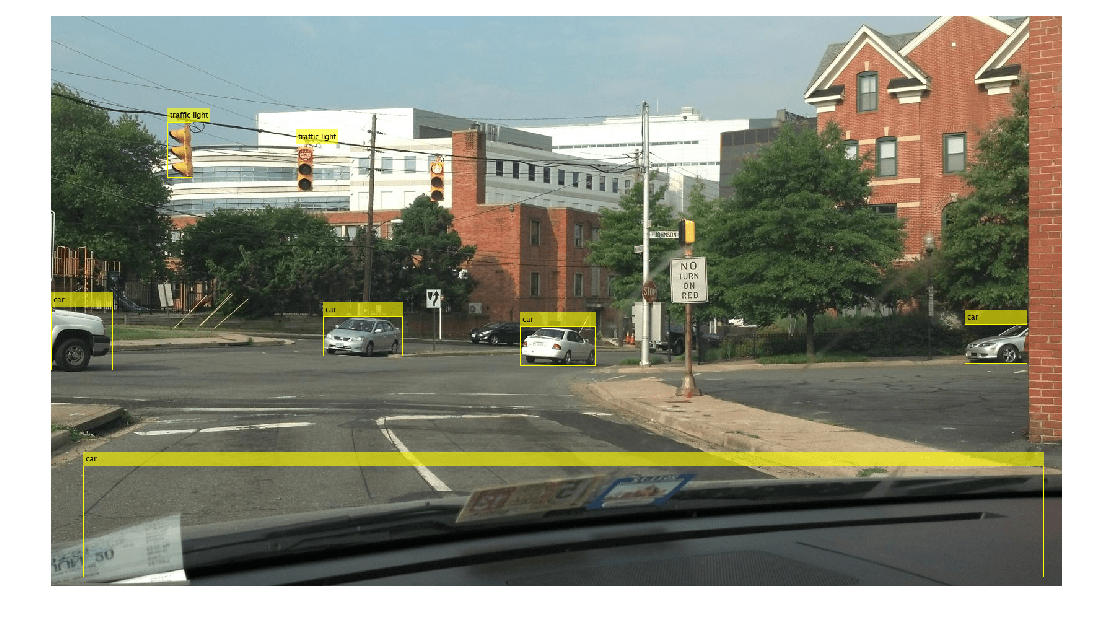

Load a pretrained RTMDet object detector.

detector = rtmdetObjectDetector("tiny-network-coco");

Create an image datastore that contains the test images.

location = fullfile(matlabroot,"toolbox","vision","visiondata","stopSignImages");

imds = imageDatastore(location);

Detect objects in the test datastore. Set the Threshold value to 0.6 and MiniBatchSize value to 16.

detectionResults = detect(detector,imds,Threshold=0.6,MiniBatchSize=16);

Read an image from the test data set, and extract the corresponding detection results.

num = 4;

I = readimage(imds,num);

bboxes = detectionResults.Boxes{num};

labels = detectionResults.Labels{num};

scores = detectionResults.Scores{num};

Display the table of detection results.

results = table(bboxes,labels,scores)

results=7×3 table

| bboxes | labels | scores |
|---|---|---|
| 189.3 171.7 40.215 90.083 | traffic light | 0.70336 |
| 1 470.09 99.577 103.39 | car | 0.65168 |
| 439.71 486.38 128.54 63.771 | car | 0.75719 |
| 758.23 502.11 122.35 63.529 | car | 0.71745 |
| 1476.4 498.05 100.52 63.998 | car | 0.6535 |
| 395.76 207.24 30.013 76.882 | traffic light | 0.67125 |
| 53.328 727.17 1550.7 180.05 | car | 0.61397 |

Display the bounding boxes and predicted class labels of the detected objects in the image selected from the test datastore.

detectedImg = insertObjectAnnotation(I,"rectangle",bboxes,labels);

figure

imshow(detectedImg)

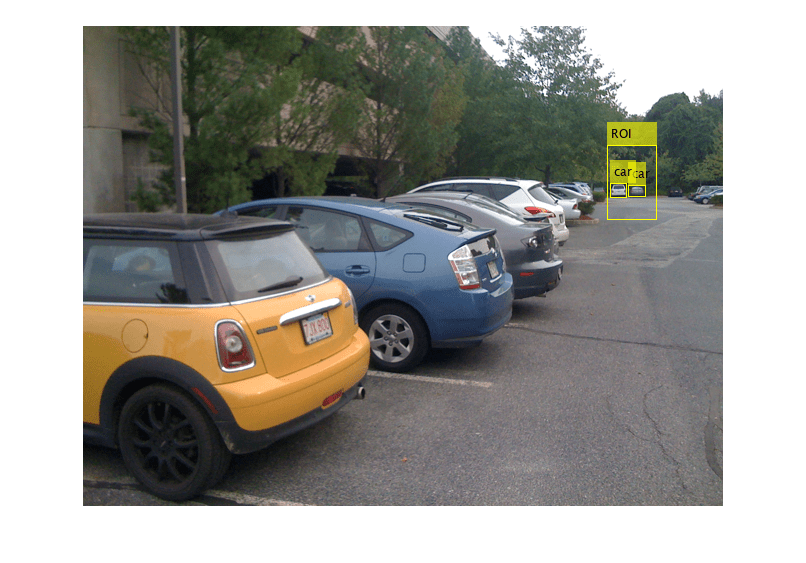

Load a pretrained RTMDet object detector.

detector = rtmdetObjectDetector("large-network-coco");

Read a test image.

img = imread("parkinglot_left.png");

Specify a rectangular region of interest (ROI) within the test image.

roiBox = [525 120 50 75];

Detect objects within the specified ROI.

[bboxes,scores,labels] = detect(detector,img,roiBox);

Display the bounding boxes and corresponding class labels of the detected objects within the ROI.

img = insertObjectAnnotation(img,"rectangle",roiBox,"ROI",AnnotationColor="yellow");

detectedImg = insertObjectAnnotation(img,"rectangle",bboxes,labels);

figure

imshow(detectedImg)

Input Arguments

detector

RTMDet object detector, specified as an rtmdetObjectDetector object.

I

Test images, specified as a numeric array of size H-by-W-by-C or H-by-W-by-C-by-B. You must specify real and nonsparse grayscale or RGB images.

H — Height of the input images.

W — Width of the input images.

C — Number of channels. The channel size of each image must be equal to the input channel size of the network. For example, for grayscale images, C must be 1. For RGB color images, it must be 3.

B — Number of test images in the batch. The detect function computes the object detection results for each test image in the batch.

When the test image size does not match the network input size, the detector resizes the input image to the value of the InputSize property of detector, unless you specify AutoResize as false.

The detector is sensitive to the range of the test image. Therefore, ensure that the test image range is similar to the range of the images used to train the detector. For example, if the detector was trained on uint8 images, rescale the input image to the range [0, 255] by using the im2uint8 or rescale function.
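As a minimal sketch of range matching, assume a detector trained on uint8 images and a test image stored as uint16. The hypothetical variables below map the uint16 range [0, 65535] linearly onto [0, 255] with base MATLAB arithmetic, which should correspond to the scaling im2uint8 applies to uint16 data; a double image in [0, 1] is scaled by 255 instead.

```matlab
% Hypothetical 16-bit test image (values span the full uint16 range)
I16 = uint16([0 32896 65535; 257 514 65278]);

% Map [0, 65535] linearly onto [0, 255]: 65535/255 = 257.
% Converting the double result to uint8 rounds to the nearest integer.
I8 = uint8(double(I16) / 257);

% A double image in [0, 1] maps onto [0, 255] by scaling with 255
Idbl = [0 0.5 1];
I8fromDouble = uint8(Idbl * 255);
```

With a real image, calling im2uint8 directly before detect is the simpler choice; the manual form only makes the mapping explicit.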

Data Types: uint8 | uint16 | int16 | double | single

ds

Datastore of test images, specified as an ImageDatastore object, CombinedDatastore object, or TransformedDatastore object containing the full filenames of the test images. The images in the datastore must be grayscale or RGB images.

roi

Region of interest (ROI) to search, specified as a vector of the form [x y width height]. The vector specifies the upper-left corner and size of a region, in pixels. If the input data is a datastore, the detect function applies the same ROI to every image.

Note

To specify the ROI to search, the AutoResize value must be true, which enables the function to automatically resize the input test images to the network input size.
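The ROI shares the [x y width height] convention of the returned bounding boxes. As an illustration only, the sketch below checks whether an ROI lies fully inside an image, assuming 1-based pixel coordinates with (x, y) at the upper-left pixel of the region. The image size and the check itself are hypothetical and are not part of the detect function; the ROI values are the ones used in the ROI example.

```matlab
imageSize = [480 640];     % hypothetical [height width] of the test image
roi = [525 120 50 75];     % [x y width height], as in the ROI example

% Last column and row covered by the ROI (1-based, inclusive)
lastCol = roi(1) + roi(3) - 1;
lastRow = roi(2) + roi(4) - 1;

% The ROI must start inside the image, have positive size, and end inside the image
roiInside = roi(1) >= 1 && roi(2) >= 1 && ...
    roi(3) > 0 && roi(4) > 0 && ...
    lastCol <= imageSize(2) && lastRow <= imageSize(1);

% For an image img of that size, the searched pixels would be:
%   roiPixels = img(roi(2):lastRow, roi(1):lastCol, :);
```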

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Example: detect(detector,I,Threshold=0.25) specifies a detection threshold of 0.25.

Threshold

Detection threshold, specified as a scalar in the range [0, 1]. The function removes detections that have scores less than this threshold value. To reduce false positives, at the possible expense of missing some detections, increase this value.
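The thresholding rule amounts to logical indexing on the scores. This sketch applies it after the fact to made-up detections, keeping scores greater than or equal to the threshold because only scores less than the threshold are removed. It illustrates the rule, not how detect is implemented internally.

```matlab
% Hypothetical detections for one image
scores = [0.91; 0.42; 0.55; 0.30];
bboxes = [10 10 40 40; 60 20 30 30; 100 50 25 60; 5 90 15 15];
threshold = 0.5;

% Remove detections whose score is less than the threshold
keep = scores >= threshold;
bboxes = bboxes(keep, :);
scores = scores(keep);
```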

SelectStrongest

Strongest bounding box selection, specified as a numeric or logical 1 (true) or 0 (false).

true — Return only the strongest bounding box for each object. The detect function calls the selectStrongestBboxMulticlass function, which uses nonmaximal suppression to eliminate overlapping bounding boxes based on their confidence scores. By default, the detect function uses this call to the selectStrongestBboxMulticlass function:

[bboxes,scores,labels] = selectStrongestBboxMulticlass(bboxes,scores,labels, ...
    RatioType="Union", ...
    OverlapThreshold=0.45);

false — Return all detected bounding boxes. You can write a custom function to eliminate overlapping bounding boxes.
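With SelectStrongest set to false, you can apply your own suppression. The sketch below is a simple greedy, per-class non-maximum suppression in base MATLAB that uses the intersection-over-union ratio and a 0.45 threshold to echo the default call above. It is an illustrative stand-in, not a reimplementation of selectStrongestBboxMulticlass, whose tie-breaking and options may differ; the detections are made-up values.

```matlab
% Hypothetical detections, as returned with SelectStrongest=false
bboxes = [10 10 50 50; 12 12 50 50; 100 100 40 40; 11 11 50 50];
scores = [0.9; 0.8; 0.7; 0.6];
labels = categorical(["car"; "car"; "car"; "person"]);
overlapThreshold = 0.45;

% Intersection-over-union for two boxes in [x y width height] form
interArea = @(a, b) max(0, min(a(1)+a(3), b(1)+b(3)) - max(a(1), b(1))) * ...
                    max(0, min(a(2)+a(4), b(2)+b(4)) - max(a(2), b(2)));
iou = @(a, b) interArea(a, b) / (a(3)*a(4) + b(3)*b(4) - interArea(a, b));

keep = false(size(scores));
classes = unique(labels);
for c = 1:numel(classes)
    % Indices of boxes in this class, strongest first
    idx = find(labels == classes(c));
    [~, order] = sort(scores(idx), 'descend');
    idx = idx(order);
    suppressed = false(size(idx));
    for i = 1:numel(idx)
        if suppressed(i)
            continue
        end
        keep(idx(i)) = true;   % keep the strongest remaining box
        for j = i+1:numel(idx)
            % Suppress weaker boxes of the same class that overlap too much
            if iou(bboxes(idx(i), :), bboxes(idx(j), :)) > overlapThreshold
                suppressed(j) = true;
            end
        end
    end
end

bboxes = bboxes(keep, :);
scores = scores(keep);
labels = labels(keep);
```

Box 2 overlaps box 1 heavily and is dropped, while box 4 survives despite the same overlap because it belongs to a different class.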

MinSize

Minimum region size containing an object, specified as a vector of the form [height width]. Units are in pixels. The minimum region size defines the size of the smallest object that the trained network can detect. When you know the minimum size, you can reduce computation time by setting MinSize to that value.

MaxSize

Maximum region size, specified as a vector of the form [height width]. Units are in pixels. The maximum region size defines the size of the largest object that the trained network can detect.

By default, MaxSize is set to the height and width of the input image I. To reduce computation time, set this value to the known maximum region size in which to detect objects in the input test image.
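MinSize and MaxSize are given as [height width], whereas bounding boxes use [x y width height]. The post-processing sketch below, with hypothetical values, shows how the two orderings line up when filtering boxes by size. It illustrates the convention only and is not how detect applies these limits internally.

```matlab
minSize = [20 10];     % [height width]
maxSize = [200 300];   % [height width]
bboxes = [5 5 8 30; 40 40 50 25; 1 1 400 150; 70 10 15 15];   % [x y width height]

widths  = bboxes(:, 3);
heights = bboxes(:, 4);

% Heights compare with element 1, widths with element 2 of each size vector
inRange = heights >= minSize(1) & widths >= minSize(2) & ...
          heights <= maxSize(1) & widths <= maxSize(2);
bboxes = bboxes(inRange, :);
```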

MiniBatchSize

Mini-batch size, specified as a positive integer. Adjust the MiniBatchSize value to help process a large collection of images. The detect function groups images into mini-batches of the specified size and processes them as a batch, which can improve computational efficiency at the cost of increased memory demand. Decrease the mini-batch size to use less memory.
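To picture the grouping, this sketch splits a hypothetical set of 30 image indices into mini-batches of 16, with the last batch holding the remainder. The batching inside detect may differ in detail; the variable names are illustrative.

```matlab
numImages = 30;        % hypothetical number of test images
miniBatchSize = 16;

numBatches = ceil(numImages / miniBatchSize);
batches = cell(numBatches, 1);
for k = 1:numBatches
    firstIdx = (k - 1) * miniBatchSize + 1;
    lastIdx = min(k * miniBatchSize, numImages);
    batches{k} = firstIdx:lastIdx;   % image indices processed together
end
```

Memory use scales roughly with the largest batch, which is why lowering MiniBatchSize reduces memory demand.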

AutoResize

Automatic resizing of input images to preserve the aspect ratio, specified as a numeric or logical 1 (true) or 0 (false). When AutoResize is set to true, the detect function resizes images to the nearest InputSize dimension while preserving the aspect ratio. Set AutoResize to logical false or 0 when performing image tiling-based inference.
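A minimal sketch of tiling-based inference, assuming the test image is at least as large as one tile and that tiles are sized to the network input. The 640-by-640 tile size and 1080-by-1920 image size are hypothetical; a real detector reports its size in the InputSize property. The code computes overlapping tile rectangles in [x y width height] form and shows how a box found inside a tile shifts back into full-image coordinates. The detect call appears only in comments because it needs a loaded detector.

```matlab
imageSize = [1080 1920];   % hypothetical [height width] of a large test image
tileSize = [640 640];      % hypothetical [height width] tile size

% 1-based upper-left tile corners; the last tile is shifted to end at the image edge
ys = unique([1:tileSize(1):imageSize(1)-tileSize(1)+1, imageSize(1)-tileSize(1)+1]);
xs = unique([1:tileSize(2):imageSize(2)-tileSize(2)+1, imageSize(2)-tileSize(2)+1]);
[X, Y] = meshgrid(xs, ys);
tiles = [X(:) Y(:) repmat([tileSize(2) tileSize(1)], numel(X), 1)];   % rows [x y width height]

% Per tile, with a real detector and image img (not run here):
%   t = tiles(k, :);
%   tileImg = img(t(2):t(2)+t(4)-1, t(1):t(1)+t(3)-1, :);
%   [b, s, l] = detect(detector, tileImg, AutoResize=false);
%   b(:, 1:2) = b(:, 1:2) + t(1:2) - 1;   % shift into full-image coordinates

% The coordinate shift, applied to one example box found in tile 4
t = tiles(4, :);
b = [10 20 30 40];
b(:, 1:2) = b(:, 1:2) + t(1:2) - 1;
```

Because neighboring tiles overlap, the same object can be detected twice; one option is to concatenate the shifted boxes from all tiles and run selectStrongestBboxMulticlass on the combined result.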

ExecutionEnvironment

Hardware resource on which to run the detector, specified as one of these values:

"auto" — Use a GPU if Parallel Computing Toolbox™ is installed and a supported GPU device is available. Otherwise, use the CPU.

"gpu" — Use the GPU. To use a GPU, you must have Parallel Computing Toolbox and a CUDA® enabled NVIDIA® GPU. If a suitable GPU is not available, the function returns an error. For information about the supported compute capabilities, see GPU Computing Requirements (Parallel Computing Toolbox).

"cpu" — Use the CPU.

Acceleration

Performance optimization, specified as one of these options:

"auto" — Automatically apply a number of compatible optimizations suitable for the input network and hardware resource.

"mex" — Compile and execute a MEX function. This option is available only when using a GPU. Using a GPU requires Parallel Computing Toolbox and a CUDA-enabled NVIDIA GPU. If Parallel Computing Toolbox or a suitable GPU is not available, then the detect function returns an error. For information about the supported compute capabilities, see GPU Computing Requirements (Parallel Computing Toolbox).

"none" — Do not use acceleration.

Using the Acceleration options "auto" and "mex" can offer performance benefits on subsequent calls with compatible parameters, at the expense of an increased initial run time. Use performance optimization when you plan to call the function multiple times using new input data.

The "mex" option generates and executes a MEX function based on the network and parameters used in the function call. You can have several MEX functions associated with a single network at one time. Clearing the network variable also clears any MEX functions associated with that network.

The "mex" option is available only for input data specified as a numeric array, cell array of numeric arrays, table, or image datastore. No other types of datastore support the "mex" option.

The "mex" option is available only when you are using a GPU. You must also have a C/C++ compiler installed. For setup instructions, see Set Up Compiler (GPU Coder).

"mex" acceleration does not support all layers. For a list of supported layers, see Supported Layers (GPU Coder).

Output Arguments

bboxes

Locations of objects detected within the input image or images, returned as one of these options:

M-by-4 matrix — Returned when the input is a single test image. M is the number of bounding boxes detected in an image. Each row of the matrix is of the form [x y width height]. The x and y values specify the upper-left corner coordinates, and width and height specify the size, of the corresponding bounding box, in pixels.

B-by-1 cell array — Returned when the input is a batch of images, where B is the number of test images in the batch. Each cell in the array contains an M-by-4 matrix specifying the bounding boxes detected within the corresponding image.
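Code that consumes this output often needs to handle both forms. This sketch, using two boxes from the datastore example results, wraps a single-image matrix in a cell array so that single-image and batch outputs can be processed the same way, then converts each [x y width height] row to [xmin ymin xmax ymax] corners. Taking x + width as the right edge is an assumption of this sketch; a pixel-inclusive convention would subtract 1.

```matlab
% Single-image output: M-by-4 rows of [x y width height]
bboxes = [189.3 171.7 40.215 90.083; 1 470.09 99.577 103.39];

% Treat single-image and batch outputs uniformly as a cell array
if ~iscell(bboxes)
    bboxes = {bboxes};
end

% Convert each box to [xmin ymin xmax ymax] corner form
corners = cellfun(@(b) [b(:, 1:2), b(:, 1:2) + b(:, 3:4)], bboxes, ...
    'UniformOutput', false);
```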

scores

Detection confidence scores for each bounding box in the range [0, 1], returned as one of these options:

M-by-1 numeric vector — Returned when the input is a single test image. M is the number of bounding boxes detected in the image.

B-by-1 cell array — Returned when the input is a batch of test images, where B is the number of test images in the batch. Each cell in the array contains an M-element row vector, where each element indicates the detection score for a bounding box in the corresponding image.

A higher score indicates higher confidence in the detection. The confidence score for each detection is a product of the corresponding objectness score and maximum class probability. The objectness score is the probability that the object in the bounding box belongs to a class in the image. The maximum class probability is the largest probability that a detected object in the bounding box belongs to a particular class.
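The score definition can be written out directly. The objectness and class-probability values below are hypothetical intermediate quantities for two boxes and three classes; detect returns only the final scores, not these inputs.

```matlab
% Hypothetical per-box quantities (not returned by detect)
objectness = [0.9; 0.5];
classProbs = [0.1 0.8 0.1; 0.6 0.3 0.1];   % one row per box, one column per class

% Maximum class probability and the index of that class for each box
[maxClassProb, classIdx] = max(classProbs, [], 2);

% Confidence score = objectness score * maximum class probability
confidence = objectness .* maxClassProb;
```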

labels

Labels for bounding boxes, returned as one of these options:

M-by-1 categorical vector — Returned when the input is a single test image. M is the number of bounding boxes detected in the image.

B-by-1 cell array — Returned when the input is an array of test images. B is the number of test images in the batch. Each cell in the array contains an M-by-1 categorical vector containing the names of the object classes.
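To summarize the labels, this sketch counts how many boxes carry each class by applying unique and accumarray to a hypothetical M-by-1 categorical vector. For batch output, the same steps apply to each cell of the array.

```matlab
% Hypothetical labels for one image (M-by-1 categorical)
labels = categorical(["car"; "traffic light"; "car"; "car"]);

% Classes present, and the number of boxes assigned to each
[classNames, ~, idx] = unique(labels);
counts = accumarray(idx, 1);
```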

detectionResults

Detection results when the input is a datastore of test images, ds, returned as a table with these columns. Each row of the table corresponds to one test image.

| Boxes | Scores | Labels |
|---|---|---|
| Predicted bounding boxes, defined in spatial coordinates as an M-by-4 numeric matrix with rows of the form [x y w h], where M is the number of bounding boxes, x and y specify the upper-left corner, and w and h specify the width and height of each box, in pixels. | Class-specific confidence scores for each bounding box, returned as an M-by-1 numeric vector with values in the range [0, 1]. | Predicted object labels assigned to bounding boxes, returned as an M-by-1 categorical vector. All categorical data returned by the datastore must contain the same categories. |
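A sketch of working with detectionResults, assuming the column names Boxes, Scores, and Labels that the datastore example reads. The hypothetical table below mimics results for two images, the second with no detections, and counts detections per image.

```matlab
% Hypothetical results for two images; the second image has no detections
Boxes  = {[1 2 30 40; 50 60 20 10]; zeros(0, 4)};
Scores = {[0.9; 0.7]; zeros(0, 1)};
Labels = {categorical(["car"; "car"]); categorical(strings(0, 1))};
detectionResults = table(Boxes, Scores, Labels);

% Number of detections per image, read from the Boxes column
numDetections = cellfun(@(b) size(b, 1), detectionResults.Boxes);

% Results for a single image, indexed by row
k = 1;
bboxes = detectionResults.Boxes{k};
scores = detectionResults.Scores{k};
```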

Extended Capabilities

C/C++ Code Generation

Generate C and C++ code using MATLAB® Coder™.

GPU Code Generation

Generate CUDA® code for NVIDIA® GPUs using GPU Coder™.

Version History

Introduced in R2024b
