Implementing Motor and Power Electronics Control on an FPGA-Based SoC

Overview

Wide-bandgap power devices such as SiC and GaN let converters and drives operate at higher switching frequencies than Si devices. Higher switching frequencies allow smaller passive components and can reduce power losses. An FPGA-based system-on-a-chip (SoC) can execute algorithms at the speeds needed to support motor and power control applications using these devices.
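As a rough illustration of why switching frequency affects passive component size, the sketch below applies the textbook ideal buck converter ripple relation, L = V_out (1 - D) / (ΔI f_sw) with D = V_out / V_in, in continuous conduction. The converter topology, the function name `buck_inductance`, and all numeric values are assumptions chosen for illustration; none of them come from the webinar.

```python
def buck_inductance(v_in, v_out, ripple_current, f_sw):
    """Inductance (H) that holds peak-to-peak inductor ripple current to
    `ripple_current` in an ideal buck converter in continuous conduction.

    Hypothetical helper for illustration only; losses are ignored.
    """
    duty = v_out / v_in  # ideal steady-state duty cycle
    return v_out * (1.0 - duty) / (ripple_current * f_sw)


if __name__ == "__main__":
    # Assumed operating point: 48 V in, 12 V out, 1 A peak-to-peak ripple.
    for f_sw in (20e3, 100e3, 1e6):
        inductance = buck_inductance(48.0, 12.0, 1.0, f_sw)
        print(f"f_sw = {f_sw / 1e3:7.0f} kHz -> L = {inductance * 1e6:7.1f} uH")
```

Under these assumptions the required inductance scales inversely with switching frequency, so a tenfold increase in frequency calls for one tenth of the inductance at the same ripple current.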

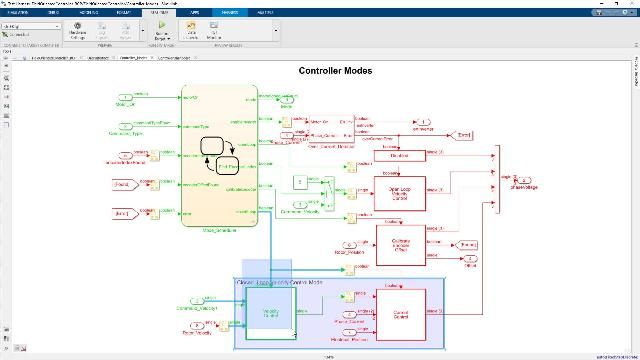

Simulink, Simscape Electrical, and HDL Coder let you design control algorithms and deploy them as HDL code to FPGA SoCs. With these tools, you can use simulation to test your algorithm against a model of a motor and inverter or power converter, so you can evaluate design tradeoffs and analyze system performance. In addition to generating HDL code, HDL Coder provides a workflow advisor to help you evaluate your HDL architecture and implementation, highlight critical paths, and generate hardware resource utilization estimates. SoC Blockset lets you simulate memory, internal and external connectivity, and scheduling and OS effects, using generated test traffic or real I/O data.

Highlights

In this webinar, MathWorks engineers will show how to:

- Automatically deploy code to both the processor and the FPGA in an SoC

- Partition a motor control algorithm between the processor and the FPGA in the same model

- Generate C code and HDL code from the Simulink model

About the Presenters

Shang-Chuan Lee is a Senior Application Engineer specializing in the electrical systems and industrial automation industries and has been with MathWorks since 2019. Her focus at MathWorks is on building models of electric motor and power conversion systems and then leveraging them for control design, hardware-in-the-loop testing, and embedded code generation. Shang-Chuan holds a Ph.D. from the University of Wisconsin-Madison (WEMPEC), specializing in motor controls, power electronics, and real-time simulation.

Joel Van Sickel is an Application Engineer focused on power electronics and FPGA-based workflows for both HIL and controls. He was a hardware design engineer prior to joining MathWorks in 2016. He received his Ph.D., focused on power systems and controls, from the Pennsylvania State University in 2010.

Recorded: 22 Feb 2022